Adopters Manual

The Samples

The quickest way to get started building with EDC is to work through

the samples. The samples cover everything from basic scenarios involving

sharing files to advanced streaming and large data use cases.

The MVD

The EDC Minimal Viable Dataspace (MVD) sets up and runs a

complete demonstration dataspace between two organizations. The MVD includes automated setup of a complete dataspace

environment in a few minutes.

Overview: Key Components

EDC is architected as modules called extensions that can be combined and customized to create components

that perform specific tasks. These components (the “C” in EDC) are not what is commonly referred to as "

microservices." Rather, EDC components may be deployed as separate services or collocated in a runtime process. This

section provides a quick overview of the key EDC components.

The Connector

The Connector is a pair of components that control data sharing and execute data transfer. These components are the

Control Plane and Data Plane, respectively. In keeping with EDC’s modular design philosophy, connector

components may be deployed in a single monolith (for simple use cases) or provisioned as clusters of individual

services. It is recommended to separate the Control Plane and Data Plane so they can be individually managed and scaled.

The Control Plane

The Control Plane is responsible for creating contract agreements that grant access to data, managing data transfers,

and monitoring usage policy compliance. For example, a data consumer’s control Plan initiates a contract negotiation

with a data provider’s connector. The negotiation is an asynchronous process that results in a contract agreement if

approved. The consumer connector then uses the contract agreement to initiate a data transfer with the provider

connector. A data transfer can be a one-shot (finite) transfer, such as a discrete set of data, or an ongoing (

non-finite) data stream. The provider control plane can pause, resume, or terminate transfers in response to certain

conditions. For example, if a contract agreement expires.

The Data Plane

The Data Plane is responsible for executing data transfers, which are managed by the Control Plane. A Data Plane sends

data using specialized technology such as a messaging system or data integration platform. EDC includes the Data Plane

Framework (DPF) for building custom Data Planes. Alternatively, a Data Plane can be built using other languages or

technologies and integrated with the EDC Control Plane by implementing

the Data Plane Signaling API.

Federated Catalog

The Federated Catalog (FC) is responsible for crawling and caching data catalogs from other participants. The FC builds

a local cache that can be queried or processed without resorting to complex distributed queries across multiple

participants.

Identity Hub

The Identity Hub securely stores and manages W3C Verifiable Credentials, including the presentation of VCs and the

issuance and re-issuance process.

The Big Picture: The Dataspace Context

EDC components are deployed to create a dataspace ecosystem. It is important to understand that there is no such thing

as “dataspace software.” At its most basic level, a dataspace is simply a context between two participants:

The Federated Catalog fetches data catalogs from other participants. A Connector negotiates a contract agreement

for data access between two participants and manages data transfers using a data plane technology. The Identity Hub

presents verifiable credentials that a participant connector uses to determine whether it trusts and should grant data

access to a counterparty.

The above EDC components can be deployed in a single runtime process (e.g., K8S ReplicaSet) or a distributed topology (

multiple ReplicaSets or clusters). The connector components can be further decomposed. For example, multiple control

plane components can be deployed within an organization in a federated manner where departments or subdivisions manage

specific instances termed Management Domains.

Customizing the EDC

EDC was designed with the philosophy that one size does not fit all. Before deploying an EDC-powered data sharing

ecosystem, you’ll need to build customizations and bundle them into one or more distributions. Specifically:

- Policies - Create a set of policies for data access and usage control. EDC adopts a code-first approach, which

involves writing policy functions.

- Verifiable Credentials - Define a set of W3C Verifiable Credentials for your use cases that your policy functions

can process. For example, a credential that identifies a particular partner type.

- Data transfer types - Define a set of data transfer technologies or types that must be supported. For example,

choose out-of-the-box support for HTTP, S3-based transfers, or Kafka. Alternatively, you can select your preferred

wire protocol and implement a custom data plane.

- Backend connectivity - You may need to integrate EDC components with back-office systems. This is done by writing

custom extensions.

Third parties and other open source projects distribute EDC extensions that can be included in a distribution. These

will typically be hosted on Maven Central.

1 - Dataspaces

A brief introduction to what a dataspace is and how it relates to EDC.

The concept of a dataspace is the starting point for learning about the EDC. A dataspace is a context between one or more participants that share data. A participant is typically an organization, but it could be any entity, such as a service or machine.

Dataspace Protocol (DSP): The Lingua Franca for Data Sharing

The messages exchanged in a dataspace are defined by the Dataspace Protocol Specification (DSP). EDC implements and builds on these asynchronous messaging patterns, so it will help to become acquainted with the specification. DSP defines how to retrieve data catalogs, conduct negotiations to create contract agreements that grant access to data, and send data over various lower-level wire protocols. While DSP focuses on the messaging layer for controlling data access, it does not specify how “trust” is established between participants. By trust, we mean on what basis a provider makes the decision to grant access to data, for example, by requiring the presentation of verifiable credentials issued by a third-party. This is specified by the Decentralized Claims Protocol (DCP), which layers on DSP. We won’t cover the two specifications here, other than to highlight a few key points that are essential to understanding how EDC works.

After reading this document, we recommend consulting the DSP and DCP specifications for further information.

The Question of Identity

One of the most important things to understand is how identities work in a dataspace and EDC. A participant has a single identity, which is a URI. EDC supports multiple identity systems, including OAuth2 and the Decentralized Claims Protocol (DCP). If DCP is used, the identity will be a Web DID.

An EDC component, such as a control plane, acts as a participant agent; in other words, it is a system that runs on behalf of a participant. Therefore, each component will use a single identity. This concept is important and nuanced. Let’s consider several scenarios.

Simple Scenarios

Single Deployment

An organization deploys a single-instance control plane. This is the simplest possible setup, although it is not very reliable or scalable. In this scenario, the connector has exactly one identity. Now take the case where an organization decides on a more robust deployment with multiple control plane instances hosted as a Kubernetes ReplicaSet. The control plane instances still share the same identity.

Distributed Deployment

EDC supports the concept of management domains, which are realms of control. If different departments want to manage EDC components independently, the organization can define management domains where those components are deployed. Each management domain can be hosted on distinct Kubernetes clusters and potentially run in different cloud environments. Externally, the organization’s EDC infrastructure appears as a unified whole, with a single top-level catalog containing multiple sub-catalogs and data sharing endpoints.

In this scenario, departments deploy their own control plane clusters. Again, each instance is configured with the same identity across all management domains.

Multiple Operating Units

In some dataspaces, a single legal entity may have multiple subdivisions operating independently. For example, a multinational may have autonomous operating units in different geographic regions with different data access rights. In this case, each operating unit is a dataspace participant with a distinct identity. EDC components deployed by each operating unit will be configured with different identities. From a dataspace perspective, each operating unit is a distinct entity.

Common Misconceptions

Data transfers are only about sending static files

Data can be in a variety of forms. While the EDC can share static files, it also supports open-ended transfers such as streaming and API access. For example, many EDC use cases involve providing automated access to event streams or API endpoints, including pausing or terminating access based on continual evaluation of data use policies.

Dataspace software has to be installed

There is no such thing as dataspace “software” or a dataspace “application.” A dataspace is a decentralized context. Participants deploy the EDC and communicate with other participant systems using DSP and DCP.

EDC adds a lot of overhead

EDC is designed as a lightweight, non-resource-intensive engine. EDC adds no overhead to data transmission since specialized wire protocols handle the latter. For example, EDC can be used to grant access to an API endpoint or data stream. Once access is obtained, the consumer can invoke the API directly or subscribe to a stream without requiring the request to be proxied through EDC components.

Cross-dataspace communication vs. interoperability

There is no such thing as cross-dataspace communication. All data sharing takes place within a dataspace. However, that does not mean there is no such thing as dataspace interoperability. Let’s unpack this.

Consider two dataspaces, DS-1 and DS-2. It’s possible for a participant P-A, a member of DS-1, to share data with P-B, a member of DS-2, under one of the following conditions:

- P-A is also a member of DS-2, or

- P-B is also a member of DS-1

P-A shares data with P-B in the context of DS-1 or DS-2. Data does not flow between DS-1 and DS-2. It’s possible for one EDC instance to operate within multiple dataspaces as long as its identity remains the same (if not, different EDC deployments will be needed).

Interoperability is different. Two dataspaces are interoperable if:

- They have compatible identity systems. For example, if both dataspaces use DCP and Web DIDs, or a form of OAuth2 with federation between the Identity Providers.

- They have a common set of verifiable credentials (or claims) and credential issuers.

- They have an agreed set of data sharing policies.

If these conditions are met, it is possible for a single connector deployment to participate in two dataspaces.

2 - Modules, Runtimes, and Components

An overview of the EDC modularity system.

EDC is built on a module system that contributes features as extensions to a runtime. Runtimes are assembled to create a

component such as a control plane, a data plane, or an identity hub. A component may be composed of a single runtime

or a set of clustered runtimes:

The EDC module system provides a great deal of flexibility as it allows you to easily add customizations and target

diverse deployment topologies from small-footprint single-instance components to highly reliable, multi-cluster setups.

The documentation and samples cover in detail how EDC extensions are implemented and configured. At this point, it’s

important to remember that extensions are combined into one or more runtimes, which are then assembled into components.

A Note on Identifiers

The EDC uses identifiers based on this architecture. There are three identifier types: participant IDs, component IDs,

and runtime IDs. A participant ID corresponds to the organization’s identifier in a dataspace. This will vary by dataspace

but is often a Web DID. All runtimes of all components operated by an organization - regardless of where they are deployed

- use the same participant ID.

A component ID is associated with a particular component, for example, a control plane or data plane deployment. If an

organization deploys two data planes across separate clusters, they will be configured with two distinct component IDs.

All runtimes within a component deployment will share the same component ID. Component IDs are permanent and survive runtime

restarts.

A runtime ID is unique to each runtime instance. Runtime IDs are ephemeral and do not survive restarts. EDC uses runtime IDs to acquire cluster locks and for tracing, among other things.

3 - Control Plane

Explains how data, policies, access control, and transfers are managed.

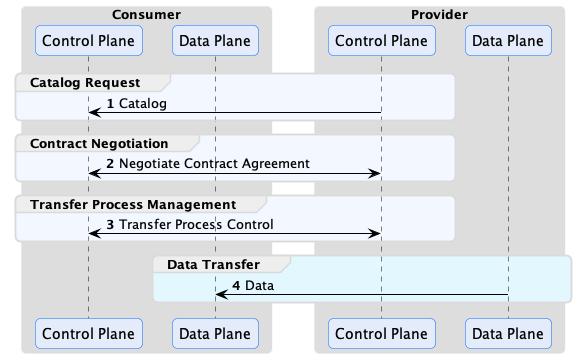

The control plane is responsible for assembling catalogs, creating contract agreements that grant access to data, managing data transfers, and monitoring usage policy compliance. Control plane operations are performed by interacting with the Management API. Consumer and provider control planes communicate using the Dataspace Protocol (DSP). This section provides an overview of how the control plane works and its key concepts.

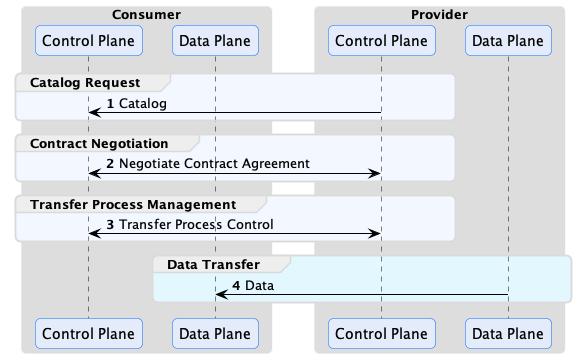

The main control plane operations are depicted below:

The consumer control plane requests catalogs containing data offers, which are then used to negotiate contract agreements. A contract agreement is an artifact that acts as a token granting access to a data set. It encodes a set of usage policies (as ODRL) and is bound to the consumer via its Participant ID. Every control plane must be configured with a Participant ID, which is the unique identifier of the dataspace participant operating it. The exact type of identifier is dataspace-specific but will often be a Web DID if the Decentralized Claims Protocol (DCP) is used as the identity system.

After obtaining a contract agreement, the consumer can initiate a data transfer. A data transfer controls the flow of data, but it does not send it. That task is performed by the consumer and provider data planes using a separate wire protocol. Data planes are typically specialized technology, such as a messaging system or data integration platform, deployed separately from the control plane. A control plane may use multiple data planes and communicate with them via a RESTful interface called the Data Plane Signaling API.

EDC is designed to handle all general forms of data. It’s important to note that a data transfer does not need to be file-based. It can be a stream, such as a market feed or an API that a client queries. Moreover, a data transfer does not need to be completed. It can exist indefinitely and be paused and resumed by the control plane at intervals. Now, let’s jump into the specifics of how the control plane works, starting briefly with the Management API and proceeding to catalogs.

Management API

The Management API is a RESTful interface for client applications to interact with the control plane. All client

operations described in this section use the Management API. We won’t cover the API in detail here since there is an

OpenAPI definition. The API can be secured using an authentication key or third-party OAuth2 identity provider, but it

is important to note that it should never be exposed over the Internet or other non-trusted networks.

The openapi documentation is available following this link:

https://eclipse-edc.github.io/Connector/openapi/management-api/

Catalogs, Datasets, and Offers

A data provider uses its control plane to publish a data catalog that other dataspace participants access. Catalog requests are made using DSP (HTTP POST). The control plane will return a response containing a DCAT Catalog The following is an example response with some sections omitted for brevity:

{

"@context": {...},

"dspace:participantId": "did:web:example.com",

"@id": "567bf428-81d0-442b-bdc8-437ed46592c9",

"@type": "dcat:Catalog",

"dcat:dataset": [

{

"@id": "asset-1",

"@type": "dcat:Dataset",

"description": "...",

"odrl:hasPolicy": {...},

"dcat:distribution": [{...}]

}

]

}

Catalogs contain Datasets, which represent data the provider wishes to make available to the requesting client. A Dataset has two important properties: odrl:hasPolicy, which is an ODRL usage policy, and one or more dcat:distribution entries that describe how to obtain the data. The catalog is serialized as JSON-LD. It is highly recommended that you become familiar with JSON-LD, and in particular, the JSON-LD Playground, since EDC makes heavy use of it.

Why does EDC use JSON-LD instead of plain JSON? There are two reasons. First, DSP is based on DCAT and ODRL, which rely on JSON-LD. As you will see, many EDC entities can be extended with custom attributes added by end-users. EDC needed a way to avoid property name clashes. JSON-LD provides the closest thing to a namespace feature for plain JSON.

Catalogs are not static documents. When a data consumer requests a catalog from a provider, the provider’s control plane dynamically generates a response based on the consumer’s identity and credentials. For example, a provider may offer specific datasets to a consumer or category of consumer (for example, if it is a tier-1 or tier-2 partner).

You will learn more about restricting access to datasets in the next section, but one way to do so is through the offer associated with a dataset. The following odrl:hasPolicy contains an Offer that specifies a dataset can only be used by an accredited manufacturer:

"odrl:hasPolicy": {

"@id": "...",

"@type": "odrl:Offer",

"odrl:obligation": {

"odrl:action": {

"@id": "use"

},

"odrl:constraint": {

"odrl:leftOperand": {

"@id": "ManufacturerAccredidation"

},

"odrl:operator": {

"@id": "odrl:eq"

},

"odrl:rightOperand": "active"

}

}

},

An offer defines usage policy. Usage policies are the requirements and permissions - or, more precisely, the duties, rights, and obligations - a provider imposes on a consumer to grant access to data. In the example above, the provider requires the consumer to be an accredited manufacturer. In practice, policies translate down into checks and verifications at runtime. When a consumer issues a catalog request, it will supply its identity (e.g., a Web DID) and potentially a set of Verifiable Presentations (VP). The provider control plane could check for a valid VP, or perform a back-office system lookup based on the client identity. Assuming the check passes, the dataset will be included in the catalog response.

A dataset will also be associated with one or more dcat:distributions:

"dcat:distribution": [

{

"@type": "dcat:Distribution",

"dct:format": {

"@id": "HttpData-PULL"

},

"dcat:accessService": {

"@id": "a6c7f3a3-8340-41a7-8154-95c6b5585532",

"@type": "dcat:DataService",

"dcat:endpointDescription": "dspace:connector",

"dcat:endpointUrl": "http://localhost:8192/api/dsp",

"dct:terms": "dspace:connector",

"dct:endpointUrl": "http://localhost:8192/api/dsp"

}

},

{

"@type": "dcat:Distribution",

"dct:format": {

"@id": "S3-PUSH"

},

"dcat:accessService": {

"@id": "a6c7f3a3-8340-41a7-8154-95c6b5585532",

"@type": "dcat:DataService",

"dcat:endpointDescription": "dspace:connector",

"dcat:endpointUrl": "http://localhost:8192/api/dsp",

"dct:terms": "dspace:connector",

"dct:endpointUrl": "http://localhost:8192/api/dsp"

}

}

]

A distribution describes the wire protocol a dataset is available over. In the above example, the dataset is available using HTTP Pull and S3 Push protocols (specified by the dct:format property). You will learn more about the differences between these protocols later. A distribution will be associated with a dcat:accessService, which is the endpoint where a contract granting access can be negotiated.

If you would like to understand the structure of DSP messages in more depth, we recommend looking at the JSON schemas and examples provided by the Dataspace Protocol Specification (DSP).

EDC Entities

So far, we have examined catalogs, datasets, and offers from the perspective of DSP messages. We will now shift focus to the primary EDC entities used to create them. EDC entities do not have a one-to-one correspondence with DSP concepts, and the reason for this will become apparent as we proceed.

Assets

An Asset is the primary building block for data sharing. An asset represents any data that can be shared. An asset is not limited to a single file or group of files. An asset could be a continual stream of data or an API endpoint. An asset does not even have to be physical data. It could be a set of computations performed at a later date. Assets are data descriptors loaded into EDC via its Management API (more on that later). Notice the emphasis on “descriptors”: assets are not the actual data to be shared but describe the data. The following excerpt shows an asset:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/"

},

"@id": "899d1ad0-532a-47e8-2245-1aa3b2a4eac6",

"properties": {

"somePublicProp": "a very interesting value"

},

"privateProperties": {

"secretKey": "..."

},

"dataAddress": {

"type": "HttpData",

"baseUrl": "http://localhost:8080/test"

}

}

When a client requests a catalog, the control plane processes its asset entries to create datasets in a DSP catalog. An asset must have a globally unique ID. We strongly recommend using the JDK UUID implementation. Entries under the properties attribute will be used to populate dataset properties. The properties attribute is open-ended and can be used to add custom fields to datasets. Note that several well-known

properties are included in the edc namespace: id, description, version, name, contenttype (more on this in the next section on asset expansion).

In contrast, the privateProperties attribute contains properties that are not visible to clients (i.e., they will not be serialized in DSP messages). They can be used to internally tag and categorize assets. As you will see, tags are useful to select groups of assets in a query.

Why is the term Asset used and not Dataset? This is mostly for historical reasons since the EDC was originally designed before the writing of the DSP specification. However, it was decided to keep the two distinct since it provides a level of decoupling between the DSP and internal layers of EDC.

Remember that assets are just descriptors - they do not contain actual data. How does EDC know where the actual data is stored? The dataAddress object acts as a pointer to where the actual data resides. The DataAddress type is open-ended. It could point to an HTTP address (HttpDataAddress), S3 bucket (S3DataAddress), messaging topic, or some other form of storage. EDC supports a defined set of storage types. These can be extended to include support for virtually any custom storage. While data addresses can contain custom data, it’s important not to include secrets since data addresses are persisted. Instead, use a secure store for secrets and include a reference to it in the DataAddress.

Understanding Expanded Assets

The @context property on an asset indicates that it is a JSON-LD type. JSON-LD (more precisely, JSON-LD terms) is used by EDC to enable namespaces for custom properties. The following excerpt shows an asset with a custom property, dataFeed:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/",

"market-systems": "http://w3id.org/market-systems/v0.0.1/ns/"

},

"@id": "...",

"properties": {

"dataFeed": {

"feedName": "Market Data",

"feedType": "PRICING",

"feedFrequency": "DAILY"

}

}

}

Notice a reference to the market-systems context has been added to @context in the above example. This context defines the terms dataFeed, feedName, feedType, and feedFrequency. When the asset is added to the control plane via the EDC’s Management API, it is expanded according to the JSON expansion algorithm This is essentially a process of inlining the full term URIs into the JSON structure. The resulting JSON will look like this:

{

"@id": "...",

"https://w3id.org/edc/v0.0.1/ns/properties": [

{

"http://w3id.org/market-systems/v0.0.1/ns/dataFeed": [

{

"http://w3id.org/market-systems/v0.0.1/ns/feedName": [

{

"@value": "Market Data"

}

],

"http://w3id.org/market-systems/v0.0.1/ns/feedType": [

{

"@value": "PRICING"

}

],

"http://w3id.org/market-systems/v0.0.1/ns/feedFrequency": [

{

"@value": "DAILY"

}

]

}

]

}

]

}

Be careful when defining custom properties. If you forget to include a custom context and use simple property names (i.e., names that are not prefixed or a URI), they will be expanded using the EDC default context, https://w3id.org/edc/v0.0.1/ns/.

EDC persists the asset in expanded form. As will be shown later, queries for assets must reference property names in their expanded form.

Policies and Policy Definitions

Policies are a generic way of defining a set of duties, rights, or obligations. EDC and DSP express policies with ODRL. EDC uses policies for the following:

- As a dataset offer in a catalog to define the requirements to access data

- As a contract agreement that grants access to data

- To enable access control

Policies are loaded into EDC via the Management API using a policy definition, which contains an ODRL policy type:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/"

},

"@type": "PolicyDefinition",

"policy": {

"@context": "http://www.w3.org/ns/odrl.jsonld",

"@id": "8c2ff88a-74bf-41dd-9b35-9587a3b95adf",

"duty": [

{

"target": "http://example.com/asset:12345",

"action": "use",

"constraint": {

"leftOperand": "headquarter_location",

"operator": "eq",

"rightOperand": "EU"

}

}

]

}

}

A policy definition allows the policy to be referenced by its @id when specifying the usage requirements for a set of assets or access control. Decoupling policies in this way allows for a great deal of flexibility. For example, specialists can create a set of corporate policies that are reused across an organization.

Contract Definitions

Contract definitions link assets and policies by declaring which policies apply to a set of assets. Contract definitions contain two types of policy:

- Contract policy

- Access policy

Contract policy determines what requirements a data consumer must fulfill and what rights it has for an asset. Contract policy corresponds directly to a dataset offer. In the previous example, a contract policy is used to require a consumer to be an accredited manufacturer. Access policy determines whether a data consumer can access an asset. For example, if a data consumer is a valid partner. The difference between contract and access policy is visibility: contract policy is communicated to a consumer via a dataset offer in a catalog, while access policy remains “hidden” and is only evaluated by the data provider’s runtime.

Now, let’s examine a contract definition:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/"

},

"@type": "https://w3id.org/edc/v0.0.1/ns/ContractDefinition",

"@id": "test-id",

"edc:accessPolicyId": "access-policy-1234",

"edc:contractPolicyId": "contract-policy-5678",

"edc:assetsSelector": [

{

"@type": "https://w3id.org/edc/v0.0.1/ns/Criterion",

"edc:operandLeft": "id",

"edc:operator": "in",

"edc:operandRight": ["id1", "id2", "id3"]

},

{

"@type": "https://w3id.org/edc/v0.0.1/ns/Criterion",

"edc:operandLeft": "productCategory",

"edc:operator": "=",

"edc:operandRight": "gold"

},

]

}

The accessPolicyId and contractPolicyId properties refer to policy definitions. The assetsSelector property is a query (similar to a SQL SELECT statement) that returns a set of assets the contract definition applies to. This allows users to associate policies with specific assets or types of assets.

Since assetsSelectors are late-bound and evaluated at runtime, contract definitions can be created before assets exist. This is a particularly important feature since it allows data security to be put in place prior to loading a set of assets. It also enables existing policies to be applied to new assets.

Catalog Generation

We’re now in a position to understand how catalog generation in EDC works. When a data consumer requests a catalog from a provider, the latter will return a catalog result with datasets that the former can access. Catalogs are specific to the consumer and dynamically generated at runtime based on client credentials.

When a data consumer makes a catalog request via DSP, it will send an access token that provides access to the consumer’s verifiable credentials in the form of a verifiable presentation (VP). We won’t go into the mechanics of how the provider obtains a VP - that is covered by DCP and the EDC IdentityHub. When the provider receives the request, it generates a catalog containing datasets using the following steps:

The control plane first retrieves contract definitions and evaluates their access and contract policies against the consumer’s set of claims. These claims are populated from the consumer’s verifiable credentials and any additional data provided by custom EDC extensions. A custom EDC extension could look up claims such as partner tier in a back-office system. Next, the assetsSelector queries from each passing contract definition is then evaluated to return a list of assets. These assets are iterated, and a dataset is created by combining the asset with the contract policy specified by the contract definition. The datasets are then collected into a catalog and returned by the client. Note that a single asset may result in multiple datasets if more than one contract definition selects it.

Careful consideration needs to be taken when designing contract definitions, particularly the level of granularity at which they operate. When a catalog request is made, The access and contract policies of all contract definitions are evaluated, and the passing ones are selected. The asset selector queries are then run from the resulting set. To optimize catalog generation, contract definitions should select groups of assets rather than correspond in a 1:1 relationship with an asset. In other words, limit contract definitions to a reasonable number and use them as a mechanism to filter groups of assets. Adding custom asset properties that serve as selection labels is an easy way to do this.

Contract Negotiations

Once a consumer has received a catalog, it can request access to a dataset by sending a DSP contract negotiation request using the Management API. The contract negotiation takes the dataset offer as a parameter. When the request is received, the provider will respond with an acknowledgment. Contract negotiations are asynchronous, which means they are not completed immediately but sometime in the future. A contract negotiation progresses through a series of states defined by the DSP specification (which we will not cover). Both the consumer and provider can transition the negotiation. When a transition is attempted, the initiating control plane sends a DSP message to the counterparty.

If a negotiation is successfully completed (termed finalized), a DSP contract agreement message is sent to the consumer. The message contains a contract agreement that can be used to access data by opening a transfer process:

{

"@context": "https://w3id.org/dspace/2024/1/context.json",

"@type": "dspace:ContractAgreementMessage",

"dspace:providerPid": "urn:uuid:a343fcbf-99fc-4ce8-8e9b-148c97605aab",

"dspace:consumerPid": "urn:uuid:32541fe6-c580-409e-85a8-8a9a32fbe833",

"dspace:agreement": {

"@id": "urn:uuid:e8dc8655-44c2-46ef-b701-4cffdc2faa44",

"@type": "odrl:Agreement",

"odrl:target": "urn:uuid:3dd1add4-4d2d-569e-d634-8394a8836d23",

"dspace:timestamp": "2023-01-01T01:00:00Z",

"odrl:permission": [{

"odrl:action": "odrl:use" ,

"odrl:constraint": [{

"odrl:leftOperand": "odrl:dateTime",

"odrl:operand": "odrl:lteq",

"odrl:rightOperand": { "@value": "2023-12-31T06:00Z", "@type": "xsd:dateTime" }

}]

}]

},

"dspace:callbackAddress": "https://example.com/callback"

}

EDC implements DSP message exchanges using a reliable quality of service. That is, all message operations and state machine transitions are performed reliably in a transaction context. EDC will only commit a state machine transition if a message is successfully acknowledged by the counterparty. If a send operation fails, the associated transition will be rolled back, and the message will be resent. As with all reliable messaging systems, EDC operations are idempotent.

Working with Asynchronous Messaging and Events

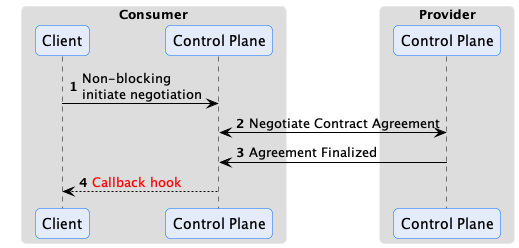

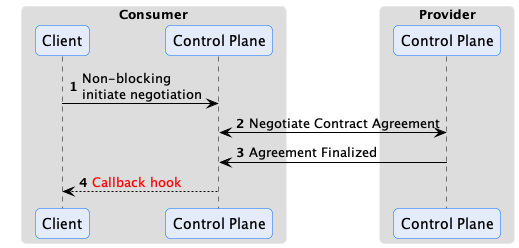

DSP and EDC are based on asynchronous messaging, and it is important to understand that and design your systems appropriately. One anti-pattern is to try to “simplify” EDC by creating a synchronous API that wraps the underlying messaging and blocks clients until a contract negotiation is complete. Put simply, don’t do that, as it will result in complex, inefficient, and incorrect code that will break EDC’s reliability guarantees. The correct way to interact with EDC and the control plane is expressed in the following sequence diagram:

EDC has an eventing system that code can plug into and receive events when something happens via a callback hook. For example, a contract negotiation is finalized. The EventRouter is used by extension code to subscribe to events. Two dispatch modes are supported: asynchronous notification or synchronous transactional notification. The latter mode can be used to reliably deliver the event to an external destination such as a message queue, database, or remote endpoint. Integrations will often take advantage of this feature by dispatching contract negotiation finalized events to another system that initiates a data transfer.

Reliable Messaging

EDC implements reliable messaging for all interactions, so it is important to understand how this quality of service works. First, all messages have a unique ID and are idempotent. If a particular message is not acknowledged, it will be resent. Therefore, it is expected the receiving endpoint will perform de-duplication (which all EDC components do). Second, reliable messaging works across restarts. For example, if a runtime crashes before it can send a response, the response will be sent either by another instance (if running in a cluster) or by the runtime when it comes back online. Reliability is achieved by recording the state of all interactions using state machines to a transactional store such as Postgres. State transitions are initiated in the context of a transaction by sending a message to the counterparty, which is only committed after an acknowledgment is received.

Transfer Processes

After a Contract Negotiation has been finalized, a consumer can request data associated with an asset by initiating a Transfer Process via the Management API.

A finite transfer process completes after the data, such as a file, has been transferred. Other types of data transfers, such as a data stream or access to an API endpoint, may be ongoing. These types of transfer processes are termed non-finite because there is no specified completion point. They continue until they are explicitly terminated or canceled.

Pay careful attention to how data is modeled. In particular, model your assets in a way that minimizes the number of Contract Negotiations and Transfer Processes that need to be created. For large data sets such as machine-learning data, this is relatively straightforward: an asset can represent individual data set. Consumers will typically need to transfer the data once or infrequently, so the number of Contract Negotiations and Transfer Processes will remain small, typically one Contract Negotiation and a few transfers.

Now, let’s take as an example a supplier that wishes to expose parts data to their partners. Do not model each part as a separate asset, as that would require at least one contract negotiation and transfer process per part. If there are millions of parts, the number of contract negotiations and transfer processes will quickly grow out of control. Instead, having a single asset represents aggregate data, such as all parts, or a significant subset, such as a part type. Only one Contract Negotiation will be needed, and if the Transfer Process is non-finite and kept open, consumers can make multiple parts data requests (over the course of hours, days, months, etc.) without incurring additional overhead.

Flow Types

We’ll explain how to open a Transfer Process in the next section. First, it is important to understand the two modes for sending data from a provider to a consumer that EDC supports.

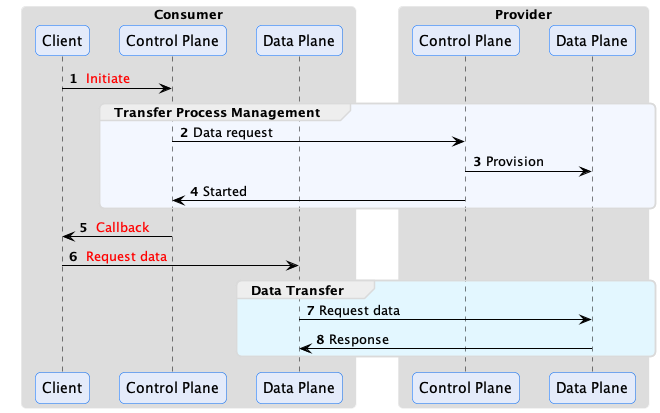

Consumer Pull

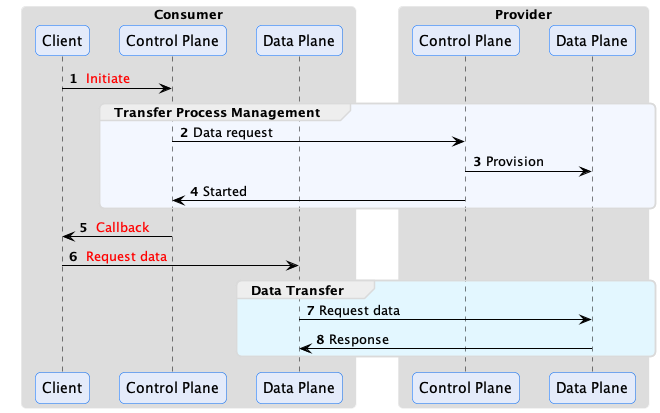

It requires the consumer to initiate the data flow operation. A common example of this is when a consumer makes an HTTP request to an endpoint and receives a response or pulls a message off a queue:

The EDR (Endpoint Data Reference) contains all the coordinates to reach the provider public endpoint where the data can be fetched. In the basic case, it is an HTTP endpoint, but it could be a message broker, an object storage and so on.

Provider Push

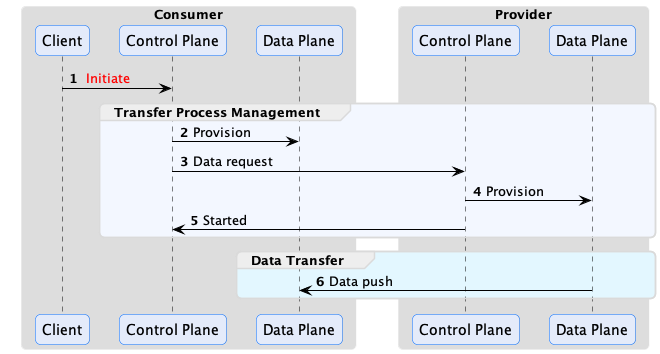

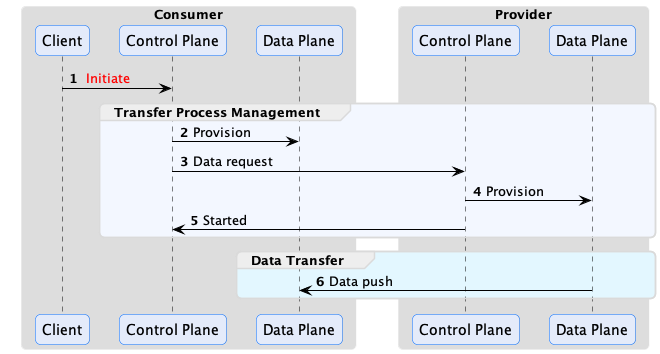

It requires the provider to actively push data to the consumer.

Note that, on the consumer side, the data plane role is to prepare the destination endpoint.

An example of provider push is when a consumer wishes to receive a dataset at an object storage endpoint that it controls.

Since the provider may need time to prepare the data, the consumer sends an access token when initiating a Transfer Process. The provider uses this token to push the data once it is ready.

Transfer Process States

Now that we have covered how transfer processes work at a high level, let’s look at the specifics. A transfer process is a shared state machine between the consumer and provider control planes. A transfer process will transition between states in response to a message received from the counterparty or as the result of a Management API operation. For example, a consumer will create a transfer process request via its Management API and send a request message to the provider. If the provider acknowledges the request with an OK, the transfer process state machine will be set to the REQUESTED state on both the consumer and provider. When the provider control plane is ready, it will send a message to the consumer, and the state machine will be transitioned to STARTED on both control planes.

The following are the most important transfer process states:

- REQUESTED - The consumer has requested a data transfer from the provider.

- STARTED - The consumer has received a start message from the provider. The data is available and can be pulled by the consumer or will be pushed by the provider.

- SUSPENDED - The consumer or provider has received a suspend message from the counterparty. All in-process data send operations will be paused.

- RESUMED - The consumer or provider has received a resume message from the counterparty. All in-process data send operations will be restarted.

- COMPLETED - The data transfer has been completed.

- TERMINATED - The consumer or provider has received a termination message from the counterparty. All in-process data send operations will be stopped.

There are a number of internal states that the consumer or provider can transition into without notifying the other party. The two most important are:

- PROVISIONED - When a data transfer request is made through the Management API on the consumer, its state machine will first transition to the PROVISIONED state to perform any required setup. After this is completed, the consumer control plane will dispatch a request to the provider and transition to the REQUESTED state. The state machine on the provider will transition to the PROVISIONED state after receiving a request and asynchronously completing any required data pre-processing.

- DEPROVISIONED - After a transfer has completed, the provider state machine will transition to the deprovisioned state to clean up any remaining resources.

As with the contract negotiation state machine, custom code can react to transition events using the EventRouter. There are also two further options for executing operations during the provisioning step on the consumer or provider. First, a Provisioner extension can be used to perform a task. EDC also includes the HttpProviderProvisioner, which invokes a configured HTTP endpoint when a provider control plane enters the provisioning step. The endpoint can front code that performs a task and asynchronously invoke a callback on the control plane when it is finished.

Policy Monitor

It may be desirable to conduct ongoing policy checks for non-finite transfer processes. Streaming data is a typical example where such checks may be needed. If a stream is active for a long duration (such as manufacturing data feed), the provider may want to check if the consumer is still a partner in good standing or has maintained an industry certification. The EDC PolicyMonitor can be embedded in the control plane or run in a standalone runtime to periodically check consumer credentials.

3.1 - Policy Engine

EDC includes a policy engine for evaluating policy expressions. It’s important to understand its design center, which

takes a code-first approach. Unlike other policy engines that use a declarative language, the EDC policy engine executes

code that is contributed as extensions called policy functions. If you are familiar with compiler design and visitors,

you will quickly understand how the policy engine works. Internally, policy expressed as ODRL is deserialized into a

POJO-based object tree (similar to an AST) and walked by the policy engine.

Let’s take one of the previous policy examples:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/"

},

"@type": "PolicyDefinition",

"policy": {

"@context": "http://www.w3.org/ns/odrl.jsonld",

"@id": "8c2ff88a-74bf-41dd-9b35-9587a3b95adf",

"duty": [

{

"target": "http://example.com/asset:12345",

"action": "use",

"constraint": {

"leftOperand": "headquarter_location",

"operator": "eq",

"rightOperand": "EU"

}

}

]

}

}

When the policy constraint is reached during evaluation, the policy engine will dispatch to a function registered under

the key header_location. Policy functions implement the AtomicConstraintRuleFunction interface:

@FunctionalInterface

public interface AtomicConstraintRuleFunction<R extends Rule, C extends PolicyContext> {

/**

* Performs the evaluation.

*

* @param operator the operation

* @param rightValue the right-side expression for the constraint

* @param rule the rule associated with the constraint

* @param context the policy context

*/

boolean evaluate(Operator operator, Object rightValue, R rule, C context);

}

A function that evaluates the previous policy will look like the following snippet:

public class TestPolicy implements AtomicConstraintRuleFunction<Duty, ParticipantAgentPolicyContext> {

public static final String HEADQUARTERS = "headquarters";

@Override

public boolean evaluate(Operator operator, Object rightValue, Duty rule, ParticipantAgentPolicyContext context) {

if (!(rightValue instanceof String headquarterLocation)) {

context.reportProblem("Right-value expected to be String but was " + rightValue.getClass());

return false;

}

var participantAgent = context.participantAgent();

var claim = participantAgent.getClaims().get(HEADQUARTERS);

if (claim == null) {

return false;

}

// ... evaluate claim and if the headquarters are in the EU, return true

return true;

}

}

Note that PolicyContext has its own hierarchy, that’s tightly bound to the policy scope.

Policy Scopes and Bindings

In EDC, policy rules are bound to a specific context termed a scope. EDC defines numerous scopes, such as one for

contract negotiations and provisioning of resources. To understand how scopes work, consider the following case,

“to access data, a consumer must be a business partner in good standing”:

{

"constraint": {

"leftOperand": "BusinessPartner",

"operator": "eq",

"rightOperand": "active"

}

}

In the above scenario, the provider EDC’s policy engine should verify a partner credential when a request is made to

initiate a contract negotiation. The business partner rule must be bound to the contract negotiation scope since

policy rules are only evaluated for each scope they are bound to. However, validating a business partner credential

may not be needed when data is provisioned if it has already been checked when starting a transfer process. To avoid an

unnecessary check, do not bind the business partner rule to the provision scope. This will result in the rule being

filtered and ignored during policy evaluation for that scope.

The relationship between scopes, rules, and functions is shown in the following diagram:

Rules are bound to scopes, and unbound rules are filtered when the policy engine evaluates a particular scope. Scopes

are bound to contexts, and functions are bound to rules for a particular scope/context. This means that separate

functions can be associated with the same rule in different scopes. Furthermore, both scopes and contexts are hierarchical

and denoted with a DOT notation. A rule bound to a parent context will be evaluated in child scopes.

Be careful when implementing policy functions, particularly those bound to the catalog request scope (request.catalog),

which may involve evaluating a large set of policies in the course of a synchronous request. Policy functions should be

efficient and avoid unnecessary remote communication. When a policy function makes a database call or invokes a

back-office system (e.g., for a security check), consider introducing a caching layer to improve performance if testing

indicates the function may be a bottleneck. This is less of a concern for policy scopes associated with asynchronous

requests where latency is generally not an issue.

In Force Policy

The InForce is an interoperable policy for specifying in force periods for contract agreements. An in force period can

be defined as a duration or a fixed date.

All dates must be expressed as UTC.

Duration

A duration is a period of time starting from an offset. EDC defines a simple expression language for specifying the

offset and duration in time units:

<offset> + <numeric value>ms|s|m|h|d

The following values are supported for <offset>:

| Value | Description |

|---|

| contractAgreement | The start of the contract agreement defined as the timestamp when the provider enters the AGREED state expressed in UTC epoch seconds |

The following values are supported for the time unit:

| Value | Description |

|---|

| ms | milliseconds |

| s | seconds |

| m | minutes |

| h | hours |

| d | days |

A duration is defined in a ContractDefinition using the following policy and left-hand

operands https://w3id.org/edc/v0.0.1/ns/inForceDate:

{

"@context": {

"cx": "https://w3id.org/cx/v0.8/",

"@vocab": "http://www.w3.org/ns/odrl.jsonld"

},

"@type": "Offer",

"@id": "a343fcbf-99fc-4ce8-8e9b-148c97605aab",

"permission": [

{

"action": "use",

"constraint": {

"and": [

{

"leftOperand": "https://w3id.org/edc/v0.0.1/ns/inForceDate",

"operator": "gt",

"rightOperand": {

"@value": "contractAgreement",

"@type": "https://w3id.org/edc/v0.0.1/ns/inForceDate:dateExpression"

}

},

{

"leftOperand": "https://w3id.org/edc/v0.0.1/ns/inForceDate:inForceDate",

"operator": "lt",

"rightOperand": {

"@value": "contractAgreement + 100d",

"@type": "https://w3id.org/edc/v0.0.1/ns/inForceDate:dateExpression"

}

}

]

}

}

]

}

Fixed Date

Fixed dates may also be specified as follows using https://w3id.org/edc/v0.0.1/ns/inForceDate operands:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/inForceDate",

"@vocab": "http://www.w3.org/ns/odrl.jsonld"

},

"@type": "Offer",

"@id": "a343fcbf-99fc-4ce8-8e9b-148c97605aab",

"permission": [

{

"action": "use",

"constraint": {

"and": [

{

"leftOperand": "https://w3id.org/edc/v0.0.1/ns/inForceDate",

"operator": "gt",

"rightOperand": {

"@value": "2023-01-01T00:00:01Z",

"@type": "xsd:datetime"

}

},

{

"leftOperand": "https://w3id.org/edc/v0.0.1/ns/inForceDate",

"operator": "lt",

"rightOperand": {

"@value": "2024-01-01T00:00:01Z",

"@type": "xsd:datetime"

}

}

]

}

}

]

}

Although xsd:datatime supports specifying timezones, UTC should be used. It is an error to use an xsd:datetime

without specifying the timezone.

No Period

If no period is specified the contract agreement is interpreted as having an indefinite in force period and will remain

valid until its other constraints evaluate to false.

Not Before and Until

Not Before and Until semantics can be defined by specifying a single https://w3id.org/edc/v0.0.1/ns/inForceDate

fixed date constraint and an

appropriate operand. For example, the following policy

defines a contact is not in force before January 1, 2023:

{

"@context": {

"edc": "https://w3id.org/edc/v0.0.1/ns/",

"@vocab": "http://www.w3.org/ns/odrl.jsonld"

},

"@type": "Offer",

"@id": "a343fcbf-99fc-4ce8-8e9b-148c97605aab",

"permission": [

{

"action": "use",

"constraint": {

"leftOperand": "edc:inForceDate",

"operator": "gt",

"rightOperand": {

"@value": "2023-01-01T00:00:01Z",

"@type": "xsd:datetime"

}

}

}

]

}

Examples

Please note that the samples use the abbreviated prefix notation "edc:inForceDate" instead of the full namespace

"https://w3id.org/edc/v0.0.1/ns/inForceDate".

4 - Data Plane

Describes how the EDC integrates with off-the-shelf protocols such as HTTP, Kafka, cloud object storage, and other technologies to transfer data between parties.

A data plane is responsible for transmitting data using a wire protocol at the direction of the control plane. Data planes

can vary greatly, from a simple serverless function to a data streaming platform or an API that clients access. One

control plane may manage multiple data planes that specialize in the type of data sent or the wire protocol requested by

the data consumer. This section provides an overview of how data planes work and the role they play in a dataspace.

Separation of Concerns

Although a data plane can be collocated in the same process as a control plane, this is not a recommended setup. Typically,

a data plane component is deployed as a separate set of instances to an independent environment such as a Kubernetes cluster.

This allows the data plane to be operated and scaled independently from the control plane. At runtime, a data plane must

register with a control plane, which in turn directs the data plane using the Data Plane Signaling API. EDC does not

ship with an out-of-the-box data plane. Rather, it provides the Data Plane Framework (DPF), a platform for building

custom data planes. You can choose to start with the DPF or build your own data plane using your programming language

of choice. In either case, understanding the data plane registration process and Signaling API are the first steps.

Data Plane Registration

In the EDC model, control planes and data planes are dynamically associated. At startup, a data plane registers itself

with a control plane using its component ID. Registration is idempotent and persistent and made available to all

clustered control plane runtimes via persistent storage. After a data plane is registered, the control plane

periodically sends a heartbeat and culls the registration if the data plane is unavailable.

The data plane registration includes metadata about its capabilities, including:

- The supported wire protocols and supported transfer types. For example, “HTTP-based consumer pull” or “S3-based provider push”

- The supported data source types.

The control plane uses data plane metadata for two purposes. First, it is used to determine which data transfer types

are available for an asset when generating a catalog. Second, the metadata is used to select a data plane when a

transfer process is requested.

Data Plane Signaling

A control plane communicates with a data plane through a RESTful interface called the Data Plane Signaling API. Custom

data planes can be written that integrate with the EDC control plane by implementing the registration protocol and the

signaling API.

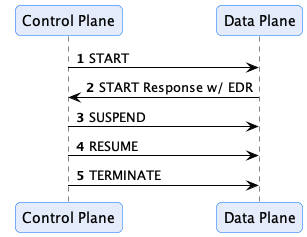

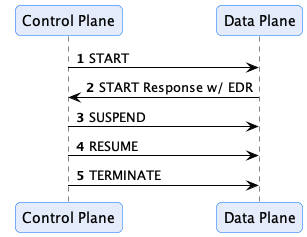

The Data Plane Signaling flow is shown below:

When a transfer process is started, and a data plane is selected, a start message will be sent. If the transfer process

is a consumer-pull type where data is accessed by the consumer, the response will contain an Endpoint Data Reference

(EDR) that contains the coordinates to the data and an access token if one is required. The control plane may send

additional signals, such as SUSPEND and RESUME, or TERMINATE, in response to events. For example, the control plane

policy monitor could send a SUSPEND or TERMINATE message if a policy violation is encountered.

The Data Plane Framework (DPF)

EDC includes a framework for building custom data planes called the DPF. DPF supports end-to-end streaming transfers

(i.e., data content is streamed rather than materialized in memory) for scalability and both pull- and push- style

transfers. The framework has extensibility points for supporting different data sources and sinks (e.g., S3, HTTP,

Kafka) and can perform direct streaming between different source and sink types.

The EDC samples contain examples of how to use and customize the DPF.

5 - Identity Hub

Identity Hub (IH) manages organization identity resources such as credentials for a dataspace participant. It is designed

for machine-to-machine interactions and does not manage personal verifiable credentials. Identity Hub implements the

Decentralized Claims Protocol (DCP) and is

based on key decentralized identity standards, including W3C DIDs, the

W3C did:web Method, and the

W3C Verifiable Credentials Data Model v1.1specifications, so we recommend

familiarizing yourself with those technologies first.

One question that frequently comes up is whether Identity Hub supports OpenID for Verifiable Credentials (OID4VC). The short answer is No. That’s because OID4VC mandates human (end-user) interactions, while Identity Hub is designed for machine-to-machine interactions where humans are not in the loop. Identity Hub is built on many of the same decentralized identity standards as OID4VC but implements DCP, a protocol specifically designed for non-human flows.

Identity Hub securely stores and manages W3C Verifiable Credentials, including handling presentation and issuance. But Identity Hub is more than an enterprise “wallet” since it handles key material and DID documents. Identity Hub manages the following identity resources:

- Verifiable Credentials. Receiving and managing issued credentials and generating Verifiable Presentations (VPs).

- Key Pairs. Generating, rotating, and revoking signing keys.

- DID Documents. Generating and publishing DID documents.

The EDC MVD Project provides a full test dataspace setup with Identity Hub. It’s an excellent tool to experiment with Identity Hub and decentralized identity technologies.

As we will see, Identity Hub can be deployed to diverse topologies, from embedded in a small footprint edge connector to an organization-wide clustered system. Before getting into these details, let’s review the role of Identity Hub.

Identities and Credentials in a Dataspace: The Role of Identity Hub

Note this section assumes a solid understanding of security protocols, DIDs, verifiable credentials, and modern cryptography concepts.

Identity Hub is built on the Decentralized Claims Protocol (DCP). This protocol overlays the Dataspace Protocol (DSP) by adding security and trust based on a decentralized identity model. To see how a decentralized identity system works, we will contrast it with a centralized approach.

Protocols such as traditional OAuth2 grants adopt a centralized model where a single identity provider or set of federated providers issue tokens on behalf of a party. Data consumers request a token from an identity provider, which, in turn, generates and signs one along with a set of claims. The data consumer passes the signed token to the data provider, which verifies the token using public key material from the identity provider:

The centralized model is problematic for many dataspaces:

- It is prone to network outages. If the identity provider goes down, the entire dataspace is rendered inoperable. Using federated providers only partially mitigates this risk while increasing complexity since large sections of a dataspace will still be subject to outage.

- It does not preserve privacy. Since an identity provider issues and verifies tokens, it is privy to communications between data consumers and providers. While the provider may not know the content of the communications, it is aware of who is communicating with whom.

- Participants are not in control of their identity and credentials. The identity provider creates identity tokens and manages credentials, not the actual dataspace participants.

Identity Hub and the Decentralized Claims Protocol are designed to address these limitations by introducing a model where there is no single point of failure, privacy is maintained, and dataspace participants control their identities and credentials. This approach is termed decentralized identity and builds on foundational standards from the W3C and Decentralized Identity Foundation.

The Presentation Flow

To understand the role of Identity Hub in a dataspace that uses a decentralized identity system, let’s start with a basic example. A consumer wants to access data from a provider that requires proof the consumer is certified by a third-party auditor. The certification proof is a W3C Verifiable Credential issued by the auditor. For now, we’ll assume the consumer’s Identity Hub already manages the VC (issuance will be described later).

When the consumer’s control plane makes a contract negotiation request to the provider, it must include a declaration of which participant it is associated with (the participant ID) and a way for the provider to access the required certification VC. From the provider’s perspective, it needs a mechanism to verify the consumer control plane is operating on behalf of the participant and that the VC is valid. Once this is done, the provider can trust the consumer control plane and grant it access to the data by issuing a contract agreement.

Instead of obtaining a token from a third-party identity provider, DCP mandates self-issued tokens. Self-issued tokens are generated and signed by the requesting party, which in the current example is the data consumer. As we will see, these self-issued tokens identify the data consumer and include a way for the provider to resolve the consumer’s credentials. This solves the issues of centralized identity systems highlighted above. By removing the central identity provider, DCP mitigates the risk of a network outage. Privacy is preserved since all communication is between the data consumer and the data provider. Finally, dataspace members remain in control of their identities and credentials.

Let’s look at how this works in practice. Identity and claims are transmitted as part of the transport header in DSP messages. The HTTP bindings for DSP do this using an Authorization token. DCP further specifies the header contents to be a self-signed JWT. The JWT sub claim contains the sender’s Web DID, and the JWT is signed with a public key contained in the associated DID document (as a verification method). The data provider verifies the sending control plane’s identity by resolving the DID document and checking the signed JWT against the public key.

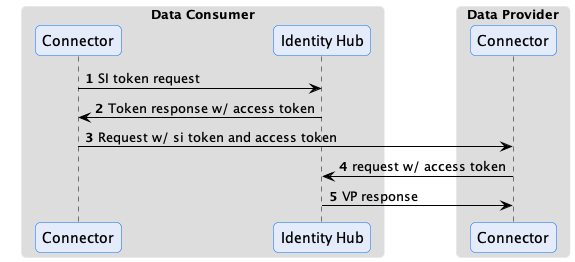

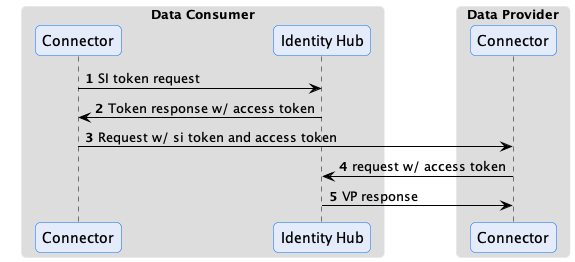

This step only proves that the requesting control plane is operating on behalf of a participant. However, the control plane cannot yet be trusted since it must present the VC issued by the third-party auditor. DCP also specifies the JWT contains an access token in the token claim. The data provider uses the access token to query the data consumer’s Identity Hub for a Verifiable Presentation with one or more required credentials. It obtains the endpoint of the consumer’s Identity Hub from a service entry of type CredentialService in the resolved DID document. At that point, the provider connector can query the Identity Hub using the access token to obtain a Verifiable Presentation containing the required VC:

Once the VP is obtained, the provider can verify the VC to establish trust with the consumer control plane.

Why not just include the VP in the token or another HTTP header and avoid the call to Identity Hub? There’s a practical reason: VPs often exceed the header size limit imposed by HTTP infrastructure such as proxies. DSP and DCP could have devised the concept of a message envelope (remember WS-* and SOAP?) but chose not to because it ties credentials to outbound client requests. To see why this is limiting, consider the scenario where a consumer requests access to an ongoing data stream. The provider control plane may set up a policy monitor to periodically check the consumer’s credentials while the stream is active. In the DCP model, the policy monitor can query the consumer’s Identity Hub using the mechanism we described without the flow being initiated by the consumer.

Verifiable Presentation Generation

When the data provider issues a presentation request, the consumer Identity Hub generates a Verifiable Presentation based on the query received in the request. DSP defines two ways to specify a query: using a list of string-based scopes or a DIF Presentation Exchange presentation definition. Identity Hub does not yet support DIF Presentation Exchange (this feature is in development), so scopes are currently the only supported mechanism for requesting a set of credentials be included.

The default setup for Identity Hub translates a scope string to a Verifiable Credential type. For example, the following presentation query includes the AuditCertificationCredential:

{

"@context": [

"https://w3id.org/tractusx-trust/v0.8",

"https://identity.foundation/presentation-exchange/submission/v1"

],

"@type": "PresentationQueryMessage",

"scope": ["AuditCertificationCredential"]

}

Identity Hub will process this as a request for the AuditCertificationCredential type. If the access token submitted along with the request permits the AuditCertificationCredential, Identity Hub will generate a Verifiable Presentation containing the AuditCertificationCredential. The generated VP will contain multiple credentials if more than one scope is present.

The default scope mapping behavior can be overridden by creating a custom extension that provides an implementation of the ScopeToCriterionTransformer interface.

Two VP formats are supported: JWT-based and Linked-Data Proof. The JWT-based format is the default and recommended format because, in testing, it exhibited an order of magnitude better performance than the Linked-Data Proof format. It’s possible to override the default JWT format by either implementing VerifiablePresentationService or providing a configuration of VerifiablePresentationServiceImpl.

When DIF Present Exchange is supported, client requests will be able to specify the presentation format to generate.

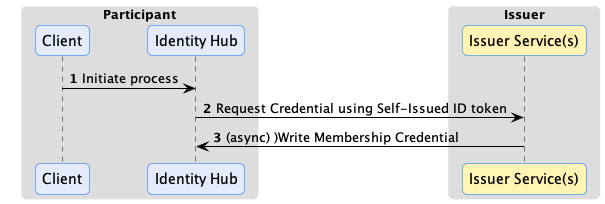

Issuance Flow

Note: Identity Hub issuance support is currently a work in progress.

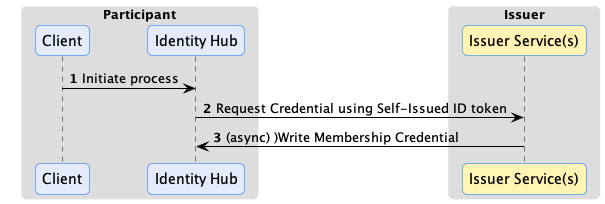

W3C Verifiable Credentials enable a holder to present claims directly to another party without the involvement or knowledge of the credential issuer. This is essential to preserve privacy and mitigate against network outages in a dataspace. DCP defines the way Identity Hub obtains credentials from an issuer. In DCP, issuance is an asynchronous process. The Identity Hub sends a request to the issuer endpoint, including a self-signed identity token. Similar to the presentation flow described above, the identity token contains an access token the issuer can use to send the VC to the requester’s Identity Hub. This is done asynchronously. The VC could be issued immediately or after an approval process:

Issuance can use the same claims verification as the presentation flow. For example, the auditor issuer in the previous example may require the presentation of a dataspace membership credential issued by another organization. In this case, the issuer would use the access token sent in the outbound request to query for the required credential from the Identity Hub before issuing its VC.

Using the Identity Hub

Identity Hub is built using the EDC modularity and extensibility system. It relies on core EDC features, including

cryptographic primitives, Json-Ld processing, and DID resolution. This architecture affords a great deal of deployment flexibility. Let’s break down the different supported deployment scenarios.

Organizational Component

Many organizations prefer to manage identity resources centrally, as strict security and control can be enforced over these sensitive resources. Identity Hub can be deployed as a centrally managed component in an organization that other EDC components use. In this scenario, Identity Hub will manage all identity resources for an organization for all dataspaces it participates in. For example, If an organization is a member of two dataspaces, DS1 and DS2, that issue membership credentials, both credentials will be managed by the central deployment. Connectors deployed for DS1 and DS2 will use their respective membership credentials from the central Identity Hub.

Per Dataspace Component

Some organizations may prefer to manage their identity resources at the dataspace level. For example, a multinational may participate in multiple regional dataspaces. Each dataspace may be geographically restricted, requiring all data and resources to be regionally fenced. In this case, an Identity Hub can deployed for each regional dataspace, allowing for separate management and isolation.

Embedded

Identity Hub is designed to scale down for edge-style deployments where footprint and latency are primary concerns. In these scenarios, Identity Hub can be deployed embedded in the same runtime process as other connector components, providing a simple, fast, and efficient deployment unit.

Identity Hub APIs and Resources

Identity Hub supports two main APIs: the Identity API for managing resources and the DCP API, which implements the wire protocol defined by the Decentralized Claims Protocol Specification. End-users generally do not interact with the DCP API, so we won’t cover it here. The Identity API is the primary way operators and third-party applications interact with the Identity Hub. Since the API provides access to highly sensitive resources, it’s essential to secure it. Above all, the API should never be exposed over a public network such as the Internet.

The best way to understand the Identity API is to start with the resources it is designed to manage. This will give you a solid grounding for reviewing the OpenAPI documentation and using its RESTful interface. It’s also important to note that since the Identity Hub is extensible, additional resource types may be added by third parties to enable custom use cases.

The Participant Context

The Identity API includes CRUD operations for managing participant contexts. This API requires elevated administrative privileges.

A participant context is a unit of control for resources in Identity Hub. A participant context is tied to a dataspace participant identity. Most of the time, an organization will have a single identity and use the same Web DID in multiple dataspaces. Its Identity Hub, therefore, will be configured with exactly one participant context to manage identity and credential resources.

If an organization uses different identities in multiple dataspaces, its Identity Hub will contain one participant context per identity. All resources are contained and accessed through a participant context. The participant context acts as both a scope and security boundary. Access control for public client API endpoints is scoped to a specific participant context. For example, the JWT access token sent to data providers described above is associated with a specific context and may not be used to access resources in another context. Furthermore, the lifecycle of participant resources is bound to their containing context; if a participant context is removed, the operation will cascade to all contained resources.

A participant context can be in one of three states:

CREATED - The participant context is initialized but not operational. Resources may be added and updated, but they are not publicly accessible.ACTIVATED - The participant context is operational, and resources are publicly accessible.DEACTIVATED - The participant context is not operational. Resources may be added and updated, but they are not publicly accessible.

The participant context can transition from CREATED to ACTIVATED and between the ACTIVATED and DEACTIVATED states.

It’s useful to note that Identity Hub relies on the core EDC eventing system to enable custom extensions. Services may register to receive participant context events, for example, when a context is created or deleted, to implement custom workflows.

DID Documents

When a participant context is created, it is associated with a DID. After a participant context is activated, a corresponding DID document will be generated and published. Currently, Identity Hub only supports Web DIDs, so publishing the document will make it available at the URL specified by the DID. Identity Hub can support other DID methods through custom extensions.

In addition, custom publishers can be created by implementing the DidDocumentPublisher interface and adding it via an extension to the Identity Hub runtime. For example, a publisher could deploy Web DID documents to a web server. Identity Hub includes an extension for locally publishing Web DID documents. The extension serves Web DID documents using a public API registered under the /did path. Note that this extension is not designed to handle high-volume requests, as DID documents are served directly from storage and are not cached. For these scenarios, publishing to a web server is recommended.

Key Pair Resources

Key pair resources are used to sign and verify credentials, presentations, and other resources managed by Identity Hub. The public and private keys associated with a key pair resource can be generated by Identity Hub or provided when the resource is created. Identity Hub persists all private keys in a secure store and supports using Hashicorp Vault as the store.

A Key pair resource can be in one of the following states:

CREATEDACTIVATEDROTATEDREVOKED

Let’s walk through these lifecycle states.

Key Activation

When a key pair is created, it is not yet used to sign resources. When a key pair is activated, Identity Hub makes the public key material available as a verification method in the participant context’s DID document so that other parties can verify resources such as verifiable presentations signed by the private key. This is done by publishing an updated DID document for the participant context during the activation step.

Key Rotation

For security reasons, key pair resources should be periodically rotated and replaced by new ones. Identity Hub supports a staged rotation process to avoid service disruptions and ensure that existing signed resources can still be validated for a specified period.

For example, let’s assume private key A is used to sign Credential CA and public key A’ is used to verify CA. If the key pair A-A’ is immediately revoked, CA can no longer be validated, which may cause a service disruption. Key rotation can be used to avoid this. When the key pair A-A’ is rotated, a new key pair, B-B’, is created and used to sign resources. The private key A is immediately destroyed. A’, however, will remain as a verification method in the DID document associated with the participant context. CA validation will continue to work. When CA and all other resources signed by A expire, A’ can safely be removed from the DID document.

It’s important to perform key rotation periodically to enhance overall system security. This implies that signed resources should have a validity period less than the rotation period of the key used to sign them and should also be reissued on a frequent basis.

Key Revocation

If a private key is compromised, it must be immediately revoked. Revocation involves removing the verification method entry in the DID document and publishing the updated version. This will invalidate all resources signed with the revoked key pair.

Verifiable Credentials

Support for storing verifiable credentials using the DCP issuance flow is currently in development. In the meantime, adopters must develop custom extensions for storing verifiable credential resources or create them through the Identity API.

Resource Operations

Identity Hub implements transactional guarantees when resource operations are performed through the Identity API. The purpose of transactional behavior is to ensure the Identity Hub maintains a consistent state. This section catalogs those operations and guarantees.

Participant Context Operations

Create

When a participant context is created, the following sequence is performed:

- A transaction is opened.

- An API key is generated to access the context via the Identity API

- A DID document is created and added to storage.

- A default key pair is created and added to storage.

- The DID document is published if the participant context is set to active when created.

- The transaction commits on success, or a rollback is performed.

Delete

When a participant context is deleted, the following sequence is performed:

- A transaction is opened.

- The DID document is unpublished if the resource is in the

PUBLISHED state. - The DID document resource is removed from storage.

- All associated key pair resources are removed from storage except for private keys.

- The participant context is removed from storage.

- The transaction commits on success, or a rollback is performed.

- All private keys associated with the context are removed after the transaction is committed since Vaults are not transactional resources.

If destroying private keys fails, manual intervention will be required to clean them up. Note that the IH will be in a consistent state.

Activate

A participant context cannot be activated without a default key pair.

When a participant context is activated, the following sequence is performed:

- A transaction is opened.

- The context is updated in storage.

- The DID document is published.

- The transaction commits on success, or a rollback is performed.

Deactivate

When a participant context is deactivated, the following sequence is performed:

- A transaction is opened.

- The context is updated in storage.

- The DID document is unpublished.

- The transaction commits on success, or a rollback is performed.

There is a force option that will commit the transaction if the DID document unpublish operation is not successful.

Key Pair Operations

Activate

This operation can only be performed when the participant context is in the CREATED or ACTIVATED state.

When a key pair is activated, the following sequence is performed:

- A transaction is opened.

- The new key pair is added to storage.

- If the DID document resource is in the

PUBLISHED state, the DID document is published with all verification methods for public keys in the ACTIVATED state. - The transaction commits on success, or a rollback is performed.

If the transaction commit fails, the DID document must be manually repaired. This can be done by republishing the DID document.

Rotate