Contributors Manual

0. Intended audience

This document is aimed at software developers who have already read the adopter documentation and want to contribute code to the Eclipse Dataspace Components project.

Its purpose is to explain the core concepts of EDC in greater detail. After reading through it, readers should have a good understanding of EDC's inner workings, implementation details and some of the advanced concepts.

So if you are a solution architect looking for a high-level description of how to integrate EDC, or a software engineer who wants to use EDC in their project, then this guide is not for you. More suitable resources can be found here and here respectively.

1. Getting started

1.1 Prerequisites

This document presumes a good understanding and proficiency in the following technical areas:

- JSON and JSON-LD

- HTTP/REST

- relational databases (PostgreSQL) and transaction management

- git and git workflows

Further, the following tools are required:

- Java Development Kit 17+

- Gradle 8+

- a POSIX compliant shell (bash, zsh,…)

- a text editor

- CLI tools like curl and git

This guide will use CLI tools as the common denominator, but in many cases graphical alternatives exist (e.g. Postman, Insomnia, some database client, etc.), and most developers will likely use IDEs like IntelliJ or VSCode. We are of course aware of them and absolutely recommend their use, but we simply cannot cover and explain every possible combination of OS, tool and tool version.

Note that Windows is not a supported OS at the moment. If Windows is a must, we recommend using WSL2 or setting up a Linux VM.

1.2 Terminology

- runtime: a Java process executing code written in the EDC programming model (e.g. a control plane)

- distribution: a specific combination of modules, compiled into a runnable form, e.g. a fat JAR file, a Docker image etc.

- launcher: a runnable Java module that pulls in other modules to form a distribution. “Launcher” and “distribution” are sometimes used synonymously

- connector: a control plane runtime and 1…N data plane runtimes. Sometimes used interchangeably with “distribution”.

- consumer: a dataspace participant who wants to ingest data under the access rules imposed by the provider

- provider: a dataspace participant who offers data assets under a set of access rules

1.3 Architectural and coding principles

When EDC was originally created, there were a few fundamental architectural principles around which we designed and implemented all dataspace components. These include:

- asynchrony: all external mutations of internal data structures happen in an asynchronous fashion. While the REST requests to trigger the mutations may still be synchronous, the actual state changes happen in an asynchronous and persistent way. For example, starting a contract negotiation through the API will only return the negotiation’s ID, and the control plane will cyclically advance the negotiation’s state.

- single-thread processing: the control plane is designed around a set of sequential state machines that employ pessimistic locking to guard against race conditions and other problems.

- idempotency: requests that do not trigger a mutation are idempotent. The same is true when provisioning external resources.

- error-tolerance: the design goal of the control plane was to favor correctness and reliability over (low) latency. That means that even if a communication partner is not reachable due to a transient error, the control plane is designed to cope with that error and attempt to overcome it.
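The following minimal Java sketch is meant to make the asynchrony and single-thread principles more tangible. All names (NegotiationManager, NegotiationState, the leased flag) are hypothetical and do not mirror EDC's actual classes. The states are reduced to two, and the pessimistic lock is modelled as a simple in-memory lease flag rather than a persistent lease. The API-facing method only stores the request and returns an ID, while a separate, cyclically invoked loop leases pending entities and advances their state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical, simplified states; a real negotiation state machine has many more
enum NegotiationState { REQUESTED, AGREED }

final class Negotiation {
    final String id;
    NegotiationState state;
    boolean leased; // stands in for a pessimistic lock: only the lease holder may mutate the entity

    Negotiation(String id, NegotiationState state) {
        this.id = id;
        this.state = state;
    }
}

final class NegotiationManager {
    private final Map<String, Negotiation> store = new ConcurrentHashMap<>();

    // API-facing entry point: store the request, return the ID at once, do not advance the state here
    String initiate() {
        var id = UUID.randomUUID().toString();
        store.put(id, new Negotiation(id, NegotiationState.REQUESTED));
        return id;
    }

    NegotiationState stateOf(String id) {
        return store.get(id).state;
    }

    // One iteration of the state machine loop; a single worker thread would call this cyclically
    synchronized int processOnce() {
        List<Negotiation> batch = new ArrayList<>();
        for (var negotiation : store.values()) {
            if (negotiation.state == NegotiationState.REQUESTED && !negotiation.leased) {
                negotiation.leased = true; // acquire the lease before touching the entity
                batch.add(negotiation);
            }
        }
        for (var negotiation : batch) {
            negotiation.state = NegotiationState.AGREED; // advance to the next state
            negotiation.leased = false;                  // release the lease
        }
        return batch.size();
    }
}
```

In a real runtime, the state transition would involve protocol messages with the counterparty rather than an immediate jump to AGREED. The point of the sketch is only that the caller of initiate() never waits for the state change.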

Prospective contributors to the Eclipse Dataspace Components are well-advised to follow these principles and build their applications around them.

There are other, less technical principles of EDC such as simplicity and self-contained-ness. We are extremely careful when adding third-party libraries or technologies to maintain a simple, fast and un-opinionated platform.

Take a look at our coding principles and our style guide.

2. The control plane

Simply put, the control plane is the brains of a connector. Its tasks include handling protocol and API requests, managing various internal asynchronous processes, validating policies, performing participant authentication and delegating the data transfer to a data plane. Its job is to handle (almost) all business logic. For that, it is designed to favor reliability over low latency. It does not directly transfer data from source to destination.

The primary way to interact with a connector’s control plane is through the Management API; all relevant Java modules are located at extensions/control-plane/api/management-api.

2.1 Entities

Detailed documentation about entities can be found here.

2.2 Policy Monitor

The policy monitor is a component that watches over ongoing transfers and ensures that the policies associated with the transfer are still valid.

Detailed documentation about the policy monitor can be found here.

2.3 Protocol extensions (DSP)

This chapter describes how EDC abstracts the interaction between connectors in a dataspace through protocol extensions and introduces the current default implementation, which follows the Dataspace Protocol specification.

Detailed documentation about protocol extensions can be found here.

3. (Postgre-)SQL persistence

PostgreSQL is a very popular open-source database with a large community and broad vendor adoption. It is also EDC's data persistence technology of choice.

Every store in the EDC that is intended to persist state comes out of the box with two implementations:

- in-memory

- sql (PostgreSQL dialect)

By default, the in-memory stores are provided by the dependency injection; the SQL variants can be used by simply adding the relevant extensions (e.g. asset-index-sql, contract-negotiation-store-sql, …) to the classpath.
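The following sketch illustrates the pattern with a hypothetical SomeEntityStore contract. An in-memory implementation serves as the fallback default, and a store contributed by another extension (for example an SQL variant implementing the same interface) takes precedence when present. The StoreSelection helper is an assumption that mimics the "default unless overridden" behaviour described above; it is not EDC's dependency injection code.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical entity and store contract, for illustration only
record SomeEntity(String id, String name) { }

interface SomeEntityStore {
    void save(SomeEntity entity);

    Optional<SomeEntity> findById(String id);

    boolean deleteById(String id);
}

// In-memory variant: fast and dependency-free, but data is lost on restart and not shared between replicas
final class InMemorySomeEntityStore implements SomeEntityStore {
    private final Map<String, SomeEntity> entities = new ConcurrentHashMap<>();

    @Override
    public void save(SomeEntity entity) {
        entities.put(entity.id(), entity);
    }

    @Override
    public Optional<SomeEntity> findById(String id) {
        return Optional.ofNullable(entities.get(id));
    }

    @Override
    public boolean deleteById(String id) {
        return entities.remove(id) != null;
    }
}

final class StoreSelection {
    // A store contributed by an extension on the classpath wins; otherwise fall back to the in-memory default
    static SomeEntityStore select(Optional<SomeEntityStore> contributed) {
        return contributed.orElseGet(InMemorySomeEntityStore::new);
    }
}
```

Because callers only depend on the SomeEntityStore interface, swapping the in-memory default for a persistent variant requires no change in the code that uses the store.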

Detailed documentation about EDC's PostgreSQL implementations can be found here.

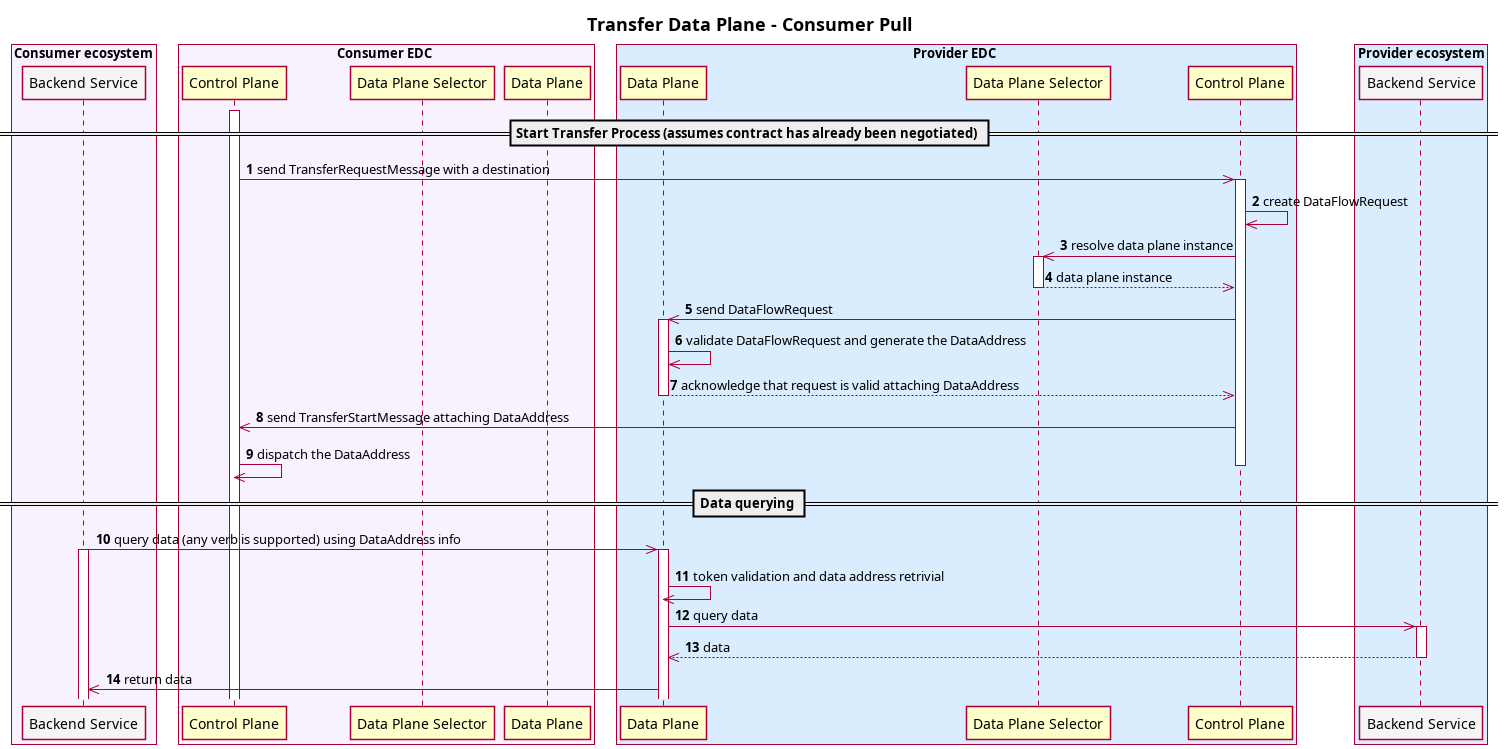

4. The data plane

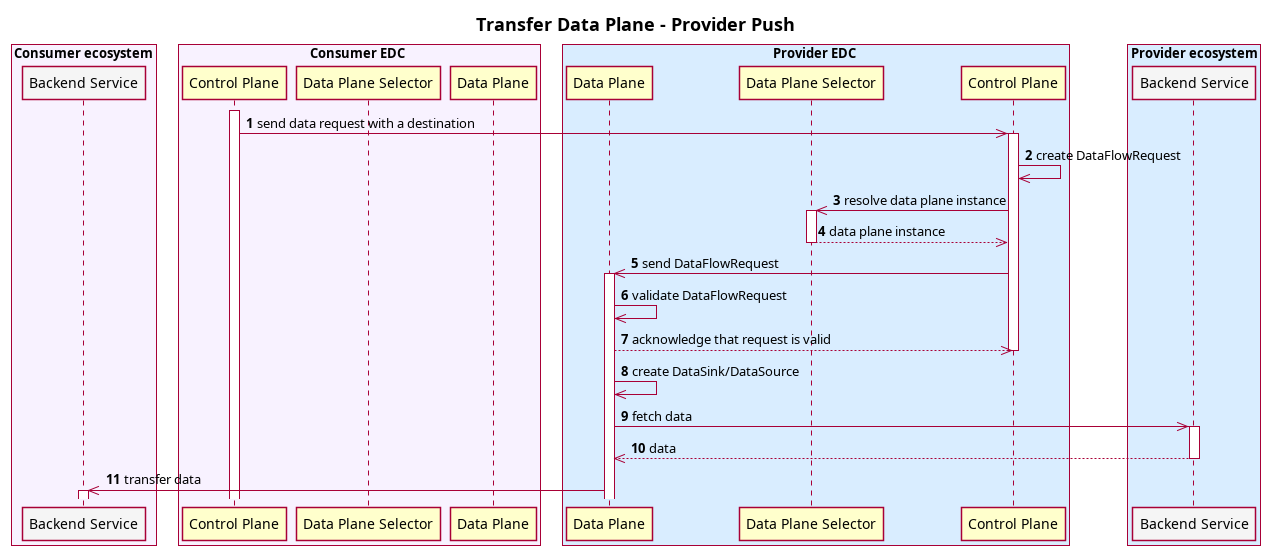

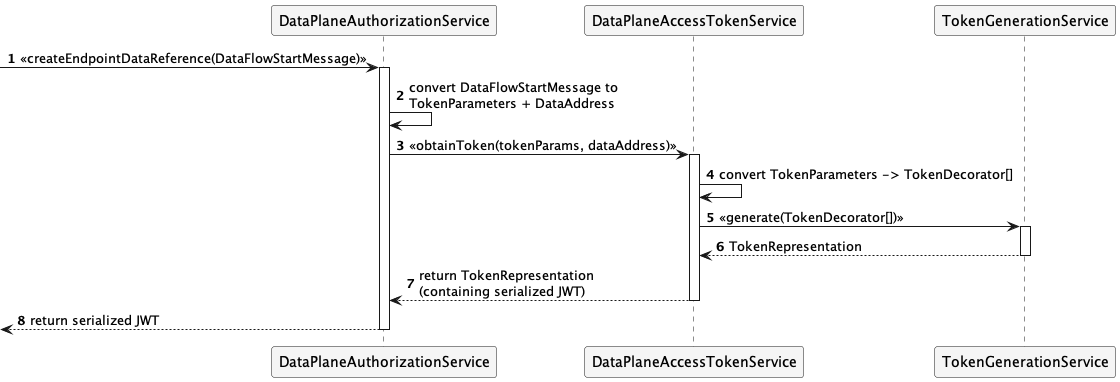

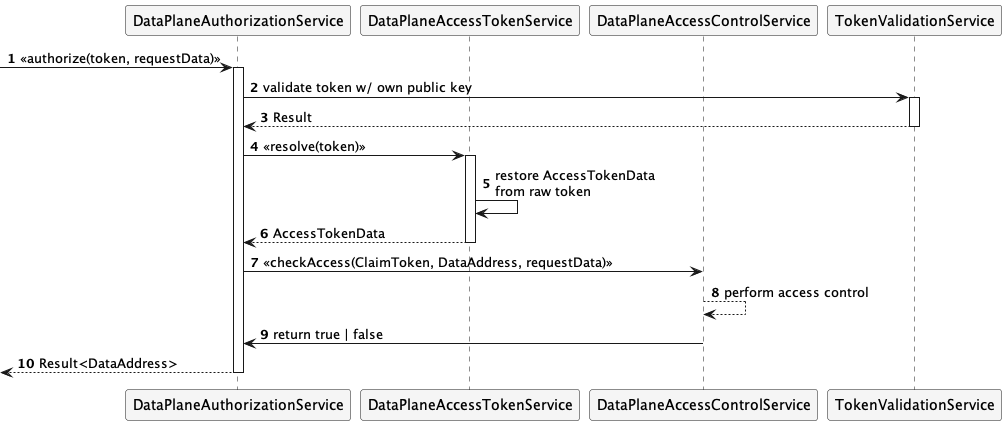

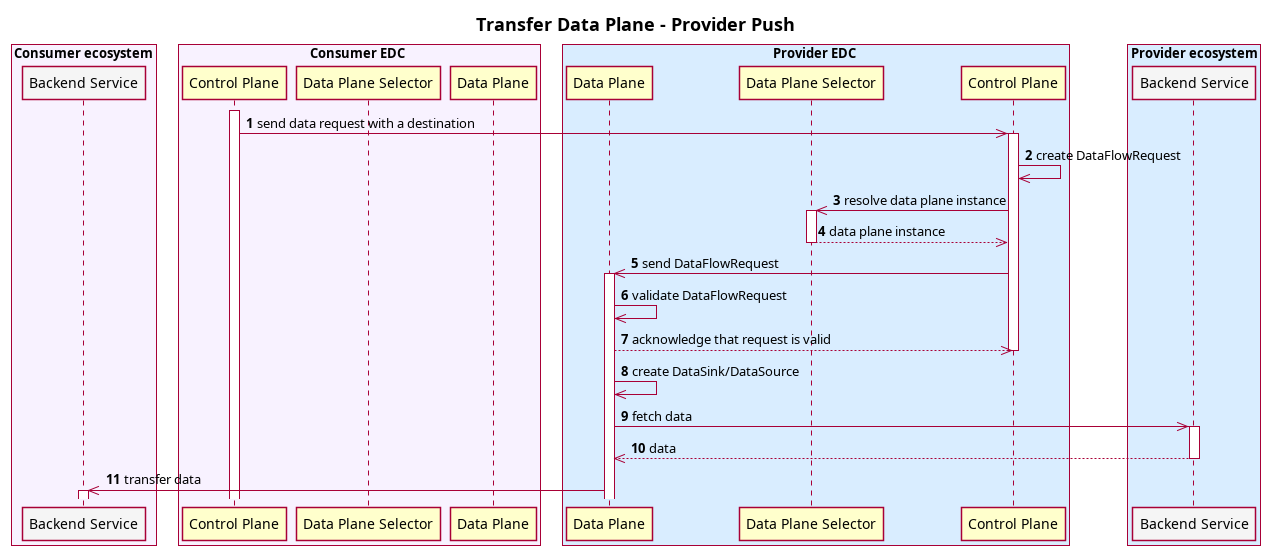

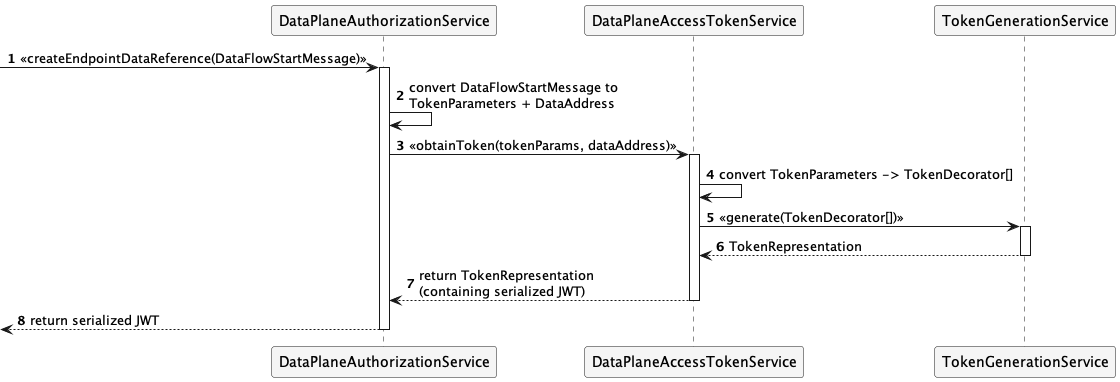

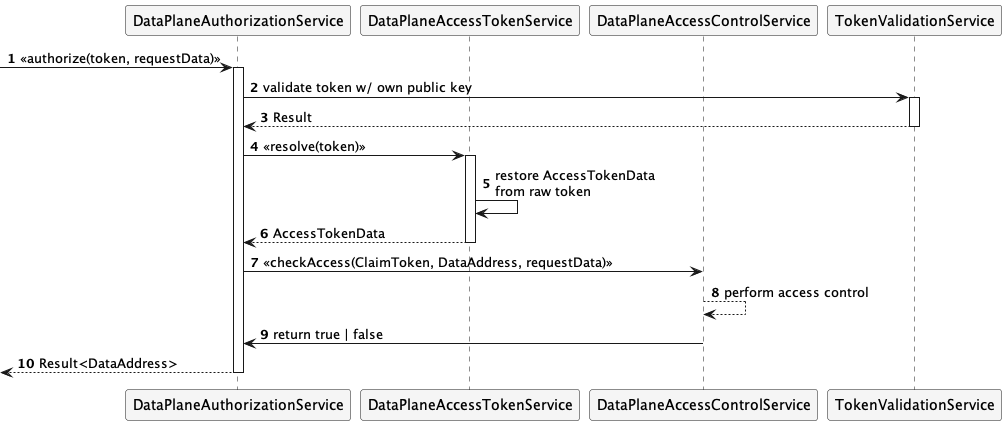

4.1 Data plane signaling

Data Plane Signaling (DPS) is the communication protocol that is used between control planes and data planes. Detailed information about it and other topics such as data plane self-registration and public API authentication can be found here.

4.2 Writing a custom data plane extension (sink/source)

The EDC Data Plane is built on top of the Data Plane Framework (DPF), which can be used for building custom data planes. The framework has extensibility points for supporting different data sources and sinks (e.g., S3, HTTP, Kafka) and can perform direct streaming between different source and sink types.

Detailed documentation about writing a custom data plane extension can be found here.

4.3 Writing a custom data plane (using only DPS)

Since the communication between control plane and data plane is well-defined in the DPS protocol, it’s possible to write a data plane from scratch (without using EDC and DPF) and make it work with the EDC control plane.

Detailed documentation about writing a custom data plane can be found here.

5. Runtime

5.1 Serialization via JSON-LD

JSON-LD is a JSON-based format for serializing Linked Data, which allows adding a specific “context” to data expressed in JSON. It has been a W3C Recommendation since 2014.

Detailed information about how JSON-LD is used in EDC can be found here.

5.2 Programming Primitives

This chapter describes the fundamental architectural and programming paradigms that are used in EDC. Typically, they are not related to one single extension or feature area; they are of an overarching character.

Detailed documentation about programming primitives can be found here.

5.3 Extension model

One of the principles EDC is built around is extensibility. This means that by simply putting a Java module on the classpath, the code in it will be used to enrich and influence the runtime behaviour of EDC. For instance, additional data persistence implementations can be contributed this way. Such modules are sometimes also referred to as “plugins”.

Detailed documentation about the EDC extension model can be found here.

5.4 Dependency injection deep dive

In EDC, dependency injection is available to inject services into extension classes (implementors of the ServiceExtension interface). The ServiceExtensionContext acts as a service registry, and since it’s not quite an IoC container, we’ll refer to it simply as the “context” in this chapter.

Detailed documentation about the EDC dependency injection mechanism can be found here.
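As a rough mental model of field injection, the sketch below uses a hypothetical @Inject annotation and a SimpleContext registry that maps service types to instances. EDC ships its own @Inject annotation and a considerably richer injection mechanism (dependency ordering, optional dependencies, and so on), none of which is reproduced here. The sketch only shows the core idea: annotated fields of an extension are populated from the registry, and a missing service causes a failure.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.HashMap;
import java.util.Map;

// Hypothetical marker annotation, NOT EDC's own @Inject
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface Inject { }

// Simplified stand-in for the "context": a plain type-to-instance registry
final class SimpleContext {
    private final Map<Class<?>, Object> services = new HashMap<>();

    <T> void registerService(Class<T> type, T instance) {
        services.put(type, instance);
    }

    // Sets every @Inject-annotated field declared on the target's class, failing on unresolved services
    void inject(Object target) {
        for (Field field : target.getClass().getDeclaredFields()) {
            if (!field.isAnnotationPresent(Inject.class)) {
                continue;
            }
            Object service = services.get(field.getType());
            if (service == null) {
                throw new IllegalStateException("No service registered for " + field.getType().getName());
            }
            try {
                field.setAccessible(true);
                field.set(target, service);
            } catch (IllegalAccessException e) {
                throw new IllegalStateException("Cannot inject field " + field.getName(), e);
            }
        }
    }
}

// Hypothetical service and extension used to exercise the registry
interface TimeSource {
    long now();
}

final class SomeExtension {
    @Inject
    private TimeSource timeSource;

    long startedAt() {
        return timeSource.now();
    }
}
```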

5.5 Service layers

Like many other applications and application frameworks, EDC is built upon a vertically oriented set of different layers that we call “service layers”.

Detailed documentation about the EDC service layers can be found here.

6. Development best practices

6.1 Writing Unit-, Component-, Integration-, Api-, EndToEnd-Tests

test pyramid… Like any other project, EDC has established a set of recommendations and rules that contributors must adhere to in order to guarantee a smooth collaboration with the project. Note that familiarity with our formal contribution guidelines is assumed. There are additional recommendations we have compiled that are relevant when deploying and administering EDC instances.

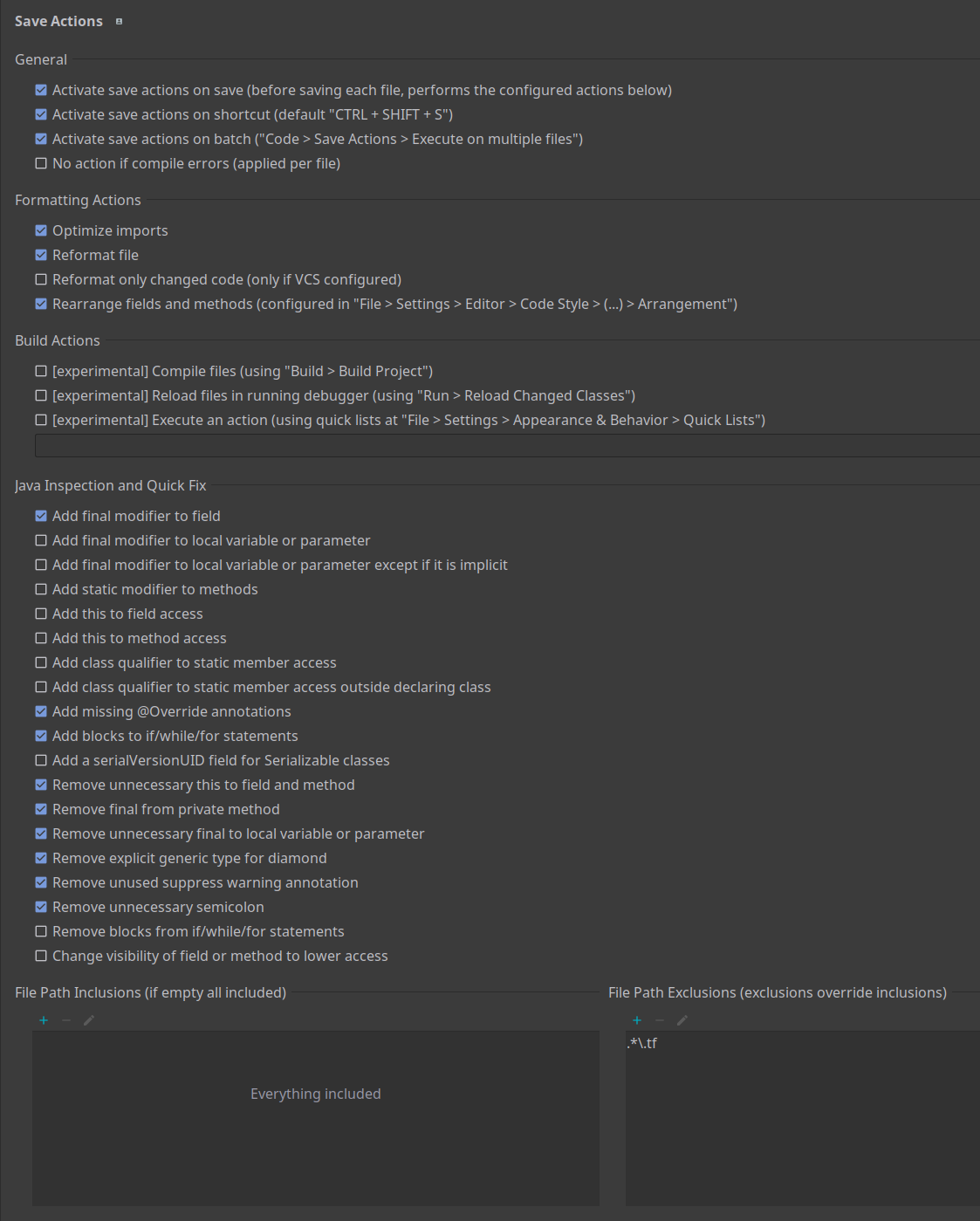

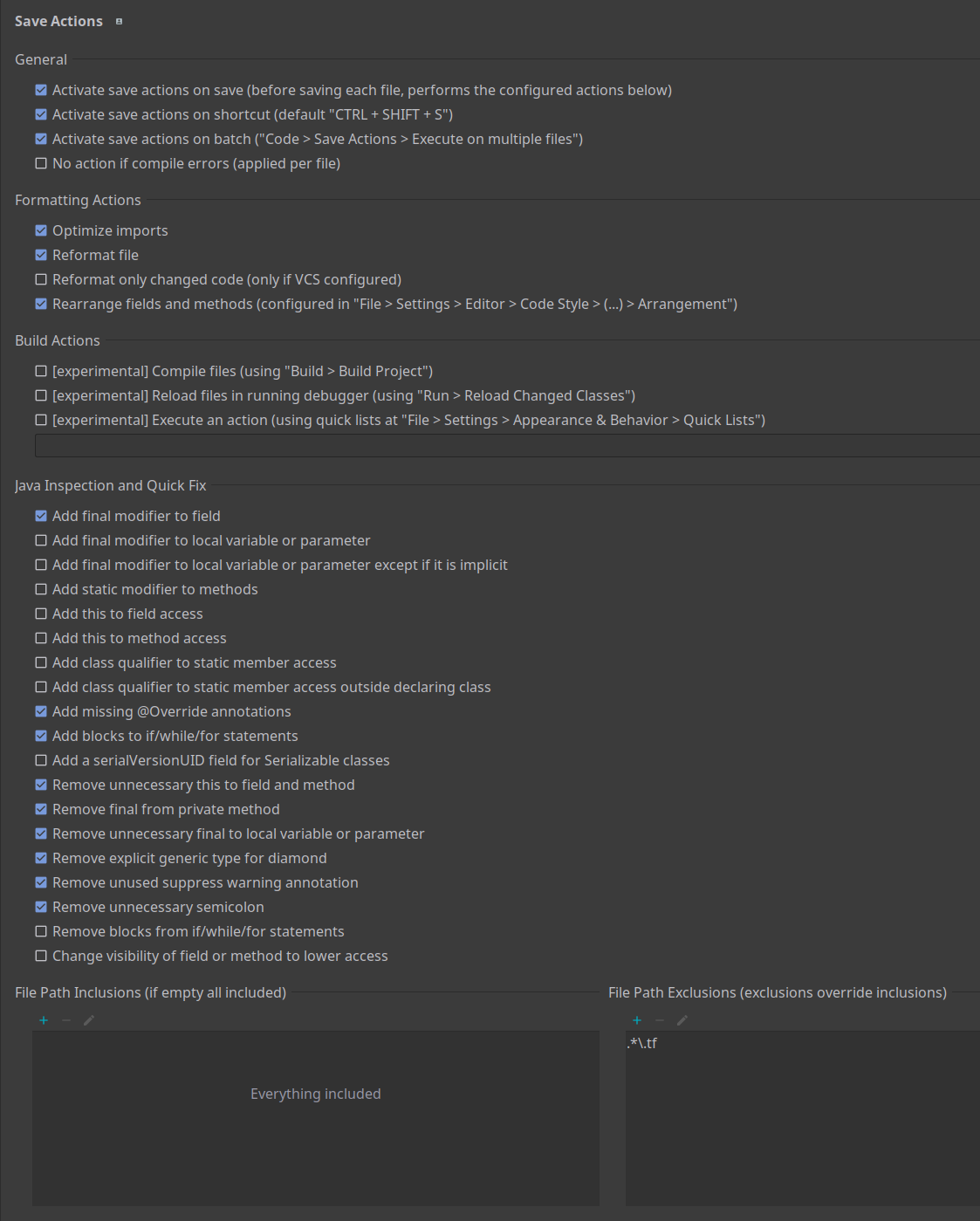

6.2 Coding best practices

Code should be written to conform with the EDC style guide.

A frequent subject of critique in pull requests is logging. Spurious and very verbose log lines like “Entering/Leaving method X” or “Performing action Z” should be avoided because they pollute the log output and don’t contribute any value.

Please find detailed information about logging here.

6.3 Testing best practices

Every class in the EDC code base should have a test class that verifies the correct functionality of the code.

Detailed information about testing can be found here.

6.4 Other best practices

Please find general best practices and recommendations here.

1 - Best practices and recommendations

1. Preface

This document aims at giving guidelines and recommendations to developers who want to use or extend EDC or EDC modules in their applications, and to DevOps engineers who are tasked with packaging and operating EDC modules as runnable application images.

Please understand this document as a recommendation from the EDC project committers team that they compiled to the best of their knowledge. We realize that use case scenarios are plentiful and requirements vary, and not every best practice is applicable everywhere. You know your use case best.

This document is not an exhaustive list of prescribed steps that will shield adopters from any conceivable harm or danger, but rather should serve as a starting point for engineers to build upon.

Finally, it should go without saying that the software of the EDC project is distributed “as is” and committers of EDC take no responsibility or liability, direct or indirect, for any harm or damage caused by the use of it. This document does not change that.

2. Security recommendations

2.1 Exposing APIs to the internet

The EDC code base has several outward-facing APIs, exclusively implemented as HTTP/REST endpoints. These have different purposes, different intended consumers and thus different security implications.

As a general rule, APIs should not be exposed directly to the internet. That does not mean that they shouldn’t be accessible via the internet; obviously, the connector and related components cannot work without a network connection. It only means that API endpoints should not be directly facing the internet; instead, there should be appropriate infrastructure in place.

It also means that we advise extreme caution when making APIs accessible via the internet: by default, only the DSP API and the data plane’s public API should be accessible via the internet, while the others (management API, signaling API, …) are intended only for local network access, e.g. within a Kubernetes cluster.

Corporate security policies might require that only HTTPS/TLS connections be used, even between pods in a Kubernetes cluster. While the EDC project makes no argument for or against it, that is certainly an idea worth considering in high-security environments.

The key take-away is that all of EDC’s APIs, if accessible outside the local network, should only be accessible through separate software components such as API gateways or load balancers. These are specialized tools with the sole purpose of performing authentication, authorization, rate limiting, IP blacklisting/whitelisting etc.

There is a plethora of ready-made components available, both commercial and open-source; therefore, the EDC project will not provide that functionality. Feature requests and issues to that effect will be ignored.

In the particular case of the DSP API, the same principle holds, with the exception of authentication and authorization, which are handled by the DSP protocol itself.

We have a rudimentary token-based API security module available, which can be used to secure the connection between API gateway and connector if so desired. It should be noted that it is not designed to act as an ingress point!

TL;DR: don’t expose any APIs if you can help it, but if you must, use available tools to harden the ingress.

2.2 Use only official TLS certificates/CAs

Typically, JVMs ship with trust stores that contain a number of widely accepted CAs. Any attempts to package additional CAs/certificates with runtime base images are discouraged, as that would be problematic for the following reasons:

- scalability: in heterogeneous networks, one cannot assume such a custom CA to be accepted by the counterparty

- maintainability: TLS certificates expire, so there is a chance that mandatory software rollouts become necessary because of expired certificates, lest the network break down completely.

- security: there have been a number of issues with CAs (1, 2), so adding non-sanctioned ones introduces a potential security weakness

2.3 Use appropriate network infrastructure

As discussed earlier, EDC does not (and will not) provide or implement tooling to harden network ingress, as that is an orthogonal concern, and there are tools better suited for that.

We encourage every connector deployment to plan and design their network layout and infrastructure right from the onset, before even writing code. Adding that later can be difficult and time-consuming.

For example, in Kubernetes deployments, which are the de-facto industry standard, networking can be taken on by ingress controllers and load balancers. Additional external infrastructure, such as API gateways, is recommended to handle authentication, authorization and request throttling.

2.4 A word on authentication and authorization

EDC does not have a concept of a “user account” as many client-facing applications do. In terms of identity, the connector itself represents a participant in a dataspace, so that is the level of granularity the connector operates on. That means that client-consumable APIs such as the Management API only have rudimentary security. This is by design and must be solved out-of-band.

The reasoning behind this is that requirements for authentication and authorization are so diverse and heterogeneous that it is virtually impossible for the EDC project to satisfy them all, or even most of them. In addition, there is very mature software available that is designed for this very use case.

Therefore, adopters of EDC have two options to consider:

- develop a custom AuthenticationService (or even a ContainerRequestFilter) that integrates with an IDP

- use a dedicated API gateway (recommended)

Both these options are viable, and may have merit depending on the use case.
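For the first option, the following hypothetical sketch shows the general shape of a header-based check. The HeaderAuthenticator interface is an assumption made for illustration and is not EDC's AuthenticationService; the static API key stands in for what, in a real deployment, would be a token validated against your IDP.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.List;
import java.util.Map;

// Hypothetical contract: decide on the request headers alone whether the caller is authenticated
interface HeaderAuthenticator {
    boolean isAuthenticated(Map<String, List<String>> headers);
}

// Placeholder implementation; a real one would validate a token with the IDP instead of a static key
final class ApiKeyAuthenticator implements HeaderAuthenticator {
    private final String headerName;
    private final byte[] expectedKey;

    ApiKeyAuthenticator(String headerName, String expectedKey) {
        this.headerName = headerName;
        this.expectedKey = expectedKey.getBytes(StandardCharsets.UTF_8);
    }

    @Override
    public boolean isAuthenticated(Map<String, List<String>> headers) {
        return headers.entrySet().stream()
                // HTTP header names are case-insensitive
                .filter(entry -> headerName.equalsIgnoreCase(entry.getKey()))
                .flatMap(entry -> entry.getValue().stream())
                // MessageDigest.isEqual does not return early on the first mismatching byte
                .anyMatch(value -> MessageDigest.isEqual(expectedKey, value.getBytes(StandardCharsets.UTF_8)));
    }
}
```

Whatever the check looks like, it remains custom code you have to maintain, which is one reason the dedicated API gateway is the recommended option.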

2.5 Docker builds

As Docker is a very popular method to build and ship applications, we put forward the following recommendations:

- use official Eclipse Temurin base images for Java

- use dedicated non-root users: in your Dockerfile, add the following lines

```dockerfile
ARG APP_USER=docker
ARG APP_UID=10100

RUN addgroup --system "$APP_USER"

RUN adduser \
    --shell /sbin/nologin \
    --disabled-password \
    --gecos "" \
    --ingroup "$APP_USER" \
    --no-create-home \
    --uid "$APP_UID" \
    "$APP_USER"

USER "$APP_USER"
```

2.6 Use proper database security

Database connections are secured with a username and a password. Please choose non-default users and strong passwords. In addition, database credentials should be stored in an HSM (vault).

Further, the roles of the technical user for the connector should be limited to SELECT, INSERT, UPDATE, and DELETE. There is no reason for that user to have permissions to modify databases, tables, permissions or execute other DDL statements.

2.7 Store sensitive data in a vault

While the default behaviour of EDC is that configuration values are taken either from environment variables, system properties or from configuration extensions, it is highly recommended to store sensitive data in a vault when developing EDC extensions.

Here is a (non-exhaustive) list of examples of such sensitive values:

- database credentials

- cryptographic keys, e.g. private keys in an asymmetric key pair

- symmetric keys

- API keys/tokens

- credentials for other third-party services, even if temporary

Sensitive values should not be passed through multiple layers of code. Instead, they should be referenced by their alias and be resolved from the vault wherever they are used. Do not store sensitive data as class members; use local variables instead, which become eligible for garbage collection once they go out of scope.
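A minimal sketch of the alias-based approach follows. SecretVault and InMemorySecretVault are hypothetical, simplified stand-ins (not EDC's vault SPI), and an in-memory vault is only suitable for tests. The client keeps only the alias as a member and resolves the secret into a local variable at the point of use.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical minimal vault contract, for illustration only
interface SecretVault {
    String resolveSecret(String alias); // returns null if the alias is unknown
}

// Test-only vault; production deployments should use a proper vault implementation
final class InMemorySecretVault implements SecretVault {
    private final Map<String, String> secrets = new ConcurrentHashMap<>();

    void storeSecret(String alias, String value) {
        secrets.put(alias, value);
    }

    @Override
    public String resolveSecret(String alias) {
        return secrets.get(alias);
    }
}

// Hypothetical client of some third-party service: it holds the alias, never the secret itself
final class RemoteServiceClient {
    private final SecretVault vault;
    private final String apiKeyAlias;

    RemoteServiceClient(SecretVault vault, String apiKeyAlias) {
        this.vault = vault;
        this.apiKeyAlias = apiKeyAlias;
    }

    String buildAuthorizationHeader() {
        // resolved into a local variable right where it is needed
        var apiKey = vault.resolveSecret(apiKeyAlias);
        if (apiKey == null) {
            throw new IllegalStateException("No secret found for alias " + apiKeyAlias);
        }
        return "Bearer " + apiKey;
    }
}
```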

3. General recommendations

3.1 Use only official releases

We recommend using only official releases of our components. The latest version can be obtained from the project’s GitHub releases page and the modules are available from MavenCentral.

Snapshots are less stable, less tested and less reliable than release versions, and they make for non-repeatable builds.

That said, we realize that sometimes living on the bleeding edge of technology is thrilling, or in some circumstances even necessary. EDC components publish a -SNAPSHOT build on every commit to the main branch, so there could be several such builds per day, each overwriting the previous one. In addition, we publish nightly builds, which are versioned <VERSION>-<YYYYMMDD>-SNAPSHOT and don’t get overwritten. For more information, please refer to the respective documentation.

3.2 Dependency hygiene

It should be at the top of every software engineer’s todo list to keep application dependencies current, to avoid security issues, minimize technical debt and prevent difficult upgrade paths. We strongly recommend using a tool to keep dependencies up-to-date, or at least to notify you when a new version is out.

This is especially true for EDC versions. Since the project has not yet reached a state of equilibrium in which we can follow SemVer rules, major (potentially breaking) changes and incompatibilities are to be expected on every version increment.

Internally, we use dependabot to maintain our dependencies, as it is well integrated with GitHub Actions, but this is not an endorsement. Alternatives exist.

3.3 Use database persistence wherever possible

While the connector runtime provides in-memory persistence by default, it is recommended to use database persistence in production scenarios, if possible. Hosting the persistence of several modules (e.g. AssetIndex and PolicyDefinitionStore) in the same database is generally OK.

This is because although memory stores are fast and easy to use, they have certain drawbacks, for instance:

- clustered deployments: multiple replicas don’t share the same data, thus they would operate on inconsistent data

- security: if an attacker is able to create a memory dump of the pod, they gain access to all application data

- memory consumption: Kubernetes has no memory limits out-of-the-box, so depending on the amount of data that is stored by a connector, this could cause runtime problems as the stored data grows, especially on resource-constrained deployments.

3.4 Use proper Vault implementations

Similar to the previous section, proper HSM (Vault) implementations should be used in all but the most basic test and demo scenarios. Vaults are used to store the most sensitive information, and by default EDC provides only an in-memory variant.

3.5 Use UUIDs as object identifiers

While we don’t enforce any particular shape or form for object identifiers, we recommend using UUIDs because they are reasonably unique, reasonably compact, and reasonably available on most tech stacks. Use the JDK UUID implementation. It’s good enough.
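For instance, a random (version 4) UUID in its canonical 36-character string form can be obtained from java.util.UUID alone; the Ids helper class is a hypothetical name used only for this example:

```java
import java.util.UUID;

final class Ids {
    // Random (version 4) UUID from the JDK, rendered in its canonical 36-character form
    static String newId() {
        return UUID.randomUUID().toString();
    }
}
```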

2 - Developer Tools

1. Introduction

We provide two Gradle plugins that can be used to simplify your build and documentation efforts. The plugins are available in the GradlePlugins GitHub repository.

2. edc-build

The plugin essentially consists of the following parts:

- a plugin class: extends Plugin<Project> from the Gradle API to hook into the Gradle task infrastructure

- extensions: POJOs that serve as model classes for configuration

- conventions: individual mutations that are applied to the project. For example, we use conventions to add some standard repositories to all projects, or to implement publishing to the Snapshot Repository and MavenCentral in a generic way.

- tasks: executable Gradle tasks that perform a certain action like merging OpenAPI Specification documents.

It is important to note that a Gradle build is separated into phases, namely Initialization, Configuration and Execution (see documentation). Some of our conventions, as well as other plugins, have to be applied in the Configuration phase.

2.1. Usage

The plugin is published on the Gradle Plugin Portal, so it can be added to your project in the standard way suggested in the [Gradle documentation](https://docs.gradle.org/current/userguide/plugins.html).

3. autodoc

This plugin provides an automated way to generate basic documentation about extensions, plug points, SPI modules and configuration settings for every EDC extension module, which can then be transformed into Markdown or HTML files and subsequently be rendered for publication in static web content.

To achieve this, simply annotate respective elements directly in Java code:

```java
@Extension(value = "Some supercool extension", categories = {"category1", "category2"})
public class SomeSupercoolExtension implements ServiceExtension {

    // default value -> not required
    @Setting(value = "Some string config property", type = "string", defaultValue = "foobar", required = false)
    public static final String SOME_STRING_CONFIG_PROPERTY = "edc.some.supercool.string";

    // no default value -> required
    @Setting(value = "Some numeric config", type = "integer", required = true)
    public static final String SOME_INT_CONFIG_PROPERTY = "edc.some.supercool.int";

    // ...
}
```

The autodoc plugin hooks into the Java compiler task (compileJava) and generates a module manifest file that contains meta information about each module. For example, it exposes all required and provided dependencies of an EDC ServiceExtension.

3.1. Usage

In order to use the autodoc plugin we must follow a few simple steps. All examples use the Kotlin DSL.

3.1.1. Add the plugin to the buildscript block of your build.gradle.kts:

```kotlin
buildscript {
    repositories {
        maven {
            url = uri("https://oss.sonatype.org/content/repositories/snapshots/")
        }
    }
    dependencies {
        classpath("org.eclipse.edc.autodoc:org.eclipse.edc.autodoc.gradle.plugin:<VERSION>")
    }
}
```

Please note that the repositories configuration can be omitted if the release version of the plugin is used, or if it is used in conjunction with the edc-build plugin.

3.1.2. Apply the plugin to the project:

There are two options to apply a plugin. For multi-module builds, this should be done at the root level.

- via the plugins block:

```kotlin
plugins {
    id("org.eclipse.edc.autodoc")
}
```

- using the imperative approach, useful when applying to allprojects or subprojects:

```kotlin
subprojects {
    apply(plugin = "org.eclipse.edc.autodoc")
}
```

The autodoc plugin exposes the following configuration values:

- processorVersion: tells the plugin which version of the annotation processor module to use. Set this value if the versions of the plugin and of the annotation processor diverge. If this is omitted, the plugin will use its own version. Please enter just the SemVer-compliant version string; no groupId or artifactName is needed.

```kotlin
configure<org.eclipse.edc.plugins.autodoc.AutodocExtension> {
    processorVersion.set("<VERSION>")
}
```

The plugin will then generate an edc.json file for every module/gradle project.

3.2. Merging the manifests

There is a Gradle task readily available to merge all the manifests into one large manifest.json file. This comes in handy when the JSON manifest is to be converted into other formats, such as Markdown, HTML, etc.

To do that, execute the following command on a shell:

By default, the merged manifests are saved to <rootProject>/build/manifest.json. This destination file can be configured using a task property:

```kotlin
// configure the destination file of the merged manifest
tasks.withType<MergeManifestsTask> {
    destinationFile = YOUR_MANIFEST_FILE
}
```

Be aware that due to the multithreaded nature of the merger task, every subproject’s edc.json gets appended to the destination file, so it is a good idea to delete that file before running the mergeManifest task. Gradle can take care of that for you, though:

```kotlin
// delete the merged manifest before the first merge task runs
rootProject.tasks.withType<MergeManifestsTask> {
    doFirst { YOUR_MANIFEST_FILE.delete() }
}
```

3.3. Rendering manifest files as Markdown or HTML

Manifests get created as JSON, which may not be ideal for end-user consumption. To convert them to HTML or Markdown, execute the following Gradle task:

```shell
./gradlew doc2md # or doc2html
```

This looks for manifest files and converts them all to either Markdown (doc2md) or static HTML (doc2html). Note that if you merged the manifests before (mergeManifests), the merged manifest file gets converted too.

The resulting *.md or *.html files are located next to the edc.json file in <module-path>/build/.

3.4. Using published manifest files (MavenCentral)

Manifest files (edc.json) are published alongside the binary jar files, sources jar and javadoc jar to MavenCentral for easy consumption by client projects. The manifest is published using the type=json and classifier=manifest properties.

Client projects that want to download manifest files (e.g. for rendering static web content) can simply define a Gradle dependency like this (Kotlin DSL):

```kotlin
implementation("org.eclipse.edc:<ARTIFACT>:<VERSION>:manifest@json")
```

For example, for the :core:control-plane:control-plane-core module in version 0.4.2-SNAPSHOT, this would be:

```kotlin
implementation("org.eclipse.edc:control-plane-core:0.4.2-SNAPSHOT:manifest@json")
```

When the dependency gets resolved, the manifest file will get downloaded to the local Gradle cache, typically located at .gradle/caches/modules-2/files-2.1. So in the example, the manifest would get downloaded to

~/.gradle/caches/modules-2/files-2.1/org.eclipse.edc/control-plane-core/0.4.2-SNAPSHOT/<HASH>/control-plane-core-0.4.2-SNAPSHOT-manifest.json

3 - OpenAPI spec

It is possible to generate an OpenAPI spec in the form of a *.yaml file by invoking two simple Gradle tasks.

Generate *.yaml files

Every module (=subproject) that contains REST endpoints is scanned for Jakarta Annotations, which are then used to generate a *.yaml specification for that particular module. This means that there is one *.yaml file per module, resulting in several *.yaml files.

Those files are named MODULENAME.yaml, e.g. observability.yaml or control.yaml.

To re-generate those files, simply invoke

This will generate all *.yaml files in the resources/openapi/yaml directory.

Gradle Plugins

We use the official Swagger Gradle plugins:

- "io.swagger.core.v3.swagger-gradle-plugin": used to generate a *.yaml file per module

So in order for a module to be picked up by the Swagger Gradle plugin, simply add it to the build.gradle.kts:

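```kotlin
// in yourModule/build.gradle.kts
plugins {
    `java-library`
    id(libs.plugins.swagger.get().pluginId) //<-- add this
}

// must come after the plugins block, which has to be the first statement of the script
val rsApi: String by project
```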

Categorizing your API

All APIs in EDC should be “categorized”, i.e. they should belong to a certain group of APIs.

Please see this decision record for reference. In order to add your module to one of the categories, simply add this block to your module’s build.gradle.kts:

```kotlin
plugins {
    `java-library`
    id(libs.plugins.swagger.get().pluginId)
}

dependencies {
    // ...
}

// add this block:
edcBuild {
    swagger {
        apiGroup.set("management-api")
    }
}
```

This tells the build plugin how to categorize your API and SwaggerHub will list it accordingly.

Note: currently we have categories for control-api and management-api.

How to generate code

This feature neither exposes the generated files through a REST endpoint providing any sort of live try-out feature, nor generates any sort of client code. A visual documentation page for our APIs is served through SwaggerHub.

However, there is a Gradle plugin capable of generating client code. Please refer to the official documentation.

4 - Data Persistence with PostgreSQL

By default, the in-memory stores are provided by the dependency injection; the SQL implementations can be used by simply registering the relevant extensions (e.g. asset-index-sql, contract-negotiation-store-sql, …).

1. Configuring DataSources

To use the SQL extensions, a DataSource is needed, and it should be registered with the DataSourceRegistry service.

The sql-pool-apache-commons extension is responsible for creating and registering pooled data sources starting from configuration. At least one data source named "default" is required.

```properties
edc.datasource.default.url=...
edc.datasource.default.name=...
edc.datasource.default.password=...
```

It is recommended to hold these values in the Vault rather than in configuration. The config key (e.g. edc.datasource.default.url) serves as the secret alias. If no vault entries are found for these keys, they will be obtained from the configuration. This is unsafe and should be avoided!
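The lookup order described above can be sketched as follows. DataSourceSettingResolver is a hypothetical class, not part of EDC. The vault is modelled as a plain function that returns null when no secret exists for an alias, and the configuration as a simple map. The warning mirrors the advice that falling back to configuration is unsafe.

```java
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;
import java.util.logging.Logger;

final class DataSourceSettingResolver {
    private static final Logger LOG = Logger.getLogger(DataSourceSettingResolver.class.getName());

    private final Function<String, String> vaultLookup; // returns null if the vault has no entry for the alias
    private final Map<String, String> config;

    DataSourceSettingResolver(Function<String, String> vaultLookup, Map<String, String> config) {
        this.vaultLookup = vaultLookup;
        this.config = config;
    }

    // The config key doubles as the secret alias: try the vault first, then fall back to plain configuration
    Optional<String> resolve(String key) {
        var fromVault = vaultLookup.apply(key);
        if (fromVault != null) {
            return Optional.of(fromVault);
        }
        var fromConfig = config.get(key);
        if (fromConfig != null) {
            LOG.warning("'" + key + "' was read from configuration instead of the vault");
        }
        return Optional.ofNullable(fromConfig);
    }
}
```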

Other datasources can be defined using the same settings structure:

```properties
edc.datasource.<datasource-name>.url=...
edc.datasource.<datasource-name>.name=...
edc.datasource.<datasource-name>.password=...
```

<datasource-name> is a string that can then be used by a store's configuration to select a specific data source.

1.2 Using a custom datasource in stores

Using a custom datasource in a store can be done by configuring the following setting:

```properties
edc.sql.store.<store-context>.datasource=<datasource-name>
```

Note that <store-context> can be an arbitrary string, but it is recommended to use a descriptive name. For example, the SqlPolicyStoreExtension defines a data source name as follows:

```java
@Extension("SQL policy store")
public class SqlPolicyStoreExtension implements ServiceExtension {

    @Setting(value = "The datasource to be used", defaultValue = DataSourceRegistry.DEFAULT_DATASOURCE)
    public static final String DATASOURCE_NAME = "edc.sql.store.policy.datasource";

    @Override
    public void initialize(ServiceExtensionContext context) {
        var datasourceName = context.getConfig().getString(DATASOURCE_NAME, DataSourceRegistry.DEFAULT_DATASOURCE);
        //...
    }
}
```
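The naming convention for these keys can be summarized in a small, hypothetical helper. It assumes, as stated above, that the mandatory data source is named "default" and that a store without an explicit setting falls back to it; it does not reproduce any EDC API.

```java
import java.util.Map;

final class StoreDataSourceConfig {
    // Name of the mandatory data source, as described in the configuration section
    static final String DEFAULT_DATASOURCE = "default";

    // Reads edc.sql.store.<store-context>.datasource, falling back to the "default" data source when unset
    static String dataSourceFor(String storeContext, Map<String, String> config) {
        return config.getOrDefault("edc.sql.store." + storeContext + ".datasource", DEFAULT_DATASOURCE);
    }

    // edc.datasource.<datasource-name>.url is where the chosen data source's URL is configured
    static String urlKeyFor(String dataSourceName) {
        return "edc.datasource." + dataSourceName + ".url";
    }
}
```

With edc.sql.store.policy.datasource=policies, the policy store would use the data source configured under edc.datasource.policies.*, while every store without such a setting keeps using "default".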

2. SQL Statement abstraction

EDC does not use any sort of Object-Relational Mapper (ORM), which would automatically translate Java object graphs to SQL statements. Instead, EDC uses pre-canned, parameterized SQL statements.

We typically distinguish between literals such as table names or column names and “templates”, which are SQL statements such as INSERT.

Both are declared as getters in an interface that extends the SqlStatements interface, with literals being default methods and templates being implemented by a BaseSqlDialectStatements class.

A simple example could look like this:

public class BaseSqlDialectStatements implements SomeEntityStatements {

@Override

public String getDeleteByIdTemplate() {

return executeStatement().delete(getSomeEntityTable(), getIdColumn());

}

@Override

public String getUpdateTemplate() {

return executeStatement()

.column(getIdColumn())

.column(getSomeStringFieldColumn())

.column(getCreatedAtColumn())

.update(getSomeEntityTable(), getIdColumn());

}

//...

}

Note that the example makes use of the SqlExecuteStatement utility class, which should be used to construct all SQL statements except queries. Queries are special in that they have a highly dynamic aspect to them. For more information, please read on in this chapter.
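To make the idea of a statement builder concrete, here is a minimal sketch in the spirit of SqlExecuteStatement. MiniExecuteStatement and its methods are hypothetical and only loosely mirror the calls used above; the exact SQL that EDC generates may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical, simplified builder for parameterized statements (not EDC's SqlExecuteStatement).
class MiniExecuteStatement {
    private final List<String> columns = new ArrayList<>();

    static MiniExecuteStatement executeStatement() {
        return new MiniExecuteStatement();
    }

    MiniExecuteStatement column(String name) {
        columns.add(name);
        return this;
    }

    String insertInto(String table) {
        var names = String.join(", ", columns);
        var placeholders = columns.stream().map(c -> "?").collect(Collectors.joining(", "));
        return "INSERT INTO %s (%s) VALUES (%s)".formatted(table, names, placeholders);
    }

    String update(String table, String whereColumn) {
        var assignments = columns.stream().map(c -> c + " = ?").collect(Collectors.joining(", "));
        return "UPDATE %s SET %s WHERE %s = ?".formatted(table, assignments, whereColumn);
    }

    String delete(String table, String whereColumn) {
        return "DELETE FROM %s WHERE %s = ?".formatted(table, whereColumn);
    }
}
```

Every value is a ? placeholder that is later bound through a PreparedStatement, so no user-supplied value is ever concatenated into the SQL string.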

As a general rule of thumb, issuing multiple statements (within one transaction) should be preferred over writing complex nested statements. It is very easy to inadvertently create an inefficient or wasteful statement that causes high resource load on the database server. The latency introduced by sending multiple statements to the DB server is likely negligible in comparison, especially because EDC is architected towards reliability rather than latency.

3. Querying PostgreSQL databases

Generally speaking, the basis for all queries is a QuerySpec object. This means that at some point a QuerySpec must be translated into an SQL SELECT statement. The place to do this is the SqlStatements implementation, often called BaseSqlDialectStatements:

@Override

public SqlQueryStatement createQuery(QuerySpec querySpec) {

var select = "SELECT * FROM %s".formatted(getSomeEntityTable());

return new SqlQueryStatement(select, querySpec, new SomeEntityMapping(this), operatorTranslator);

}

Now, there are a few things to unpack here:

- the SELECT statement serves as the starting point for the query

- individual WHERE clauses get added by parsing the filterExpression property of the QuerySpec

- LIMIT and OFFSET clauses get appended based on QuerySpec#limit and QuerySpec#offset

- the SomeEntityMapping maps the canonical form onto the SQL literals

- the operatorTranslator is used to convert operators such as = or like into SQL operators
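The interplay of the SELECT prefix, the filter expression, the mapping and the operator translation can be sketched as follows. All types here (Criterion, SimpleQuerySpec, SqlQuery, SimpleQueryTranslator) are hypothetical, heavily simplified stand-ins; EDC's SqlQueryStatement handles many more cases, such as nested and JSON fields.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, heavily simplified model of QuerySpec -> SQL; not EDC's SqlQueryStatement.
record Criterion(String operandLeft, String operator, Object operandRight) {}

record SimpleQuerySpec(List<Criterion> filterExpression, int offset, int limit) {}

record SqlQuery(String sql, List<Object> parameters) {}

class SimpleQueryTranslator {
    // canonical field name -> SQL column (the role of SomeEntityMapping)
    private final Map<String, String> mapping;
    // canonical operator -> SQL operator (the role of the operatorTranslator)
    private final Map<String, String> operators = Map.of("=", "=", "like", "LIKE");

    SimpleQueryTranslator(Map<String, String> mapping) {
        this.mapping = mapping;
    }

    SqlQuery createQuery(String table, SimpleQuerySpec spec) {
        var sql = new StringBuilder("SELECT * FROM ").append(table);
        var params = new ArrayList<Object>();
        var first = true;
        for (var c : spec.filterExpression()) {
            var column = mapping.get(c.operandLeft());
            var op = operators.get(c.operator().toLowerCase());
            if (column == null || op == null) {
                throw new IllegalArgumentException("Unsupported criterion: " + c);
            }
            // one WHERE/AND clause per criterion, values become bound parameters
            sql.append(first ? " WHERE " : " AND ").append(column).append(' ').append(op).append(" ?");
            params.add(c.operandRight());
            first = false;
        }
        sql.append(" LIMIT ? OFFSET ?");
        params.add(spec.limit());
        params.add(spec.offset());
        return new SqlQuery(sql.toString(), params);
    }
}
```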

Theoretically, it is possible to map every schema onto every other schema, given that they are of equal cardinality. To achieve that, EDC introduces the notion of a canonical form, which is our internal working schema for entities. In other words, this is the schema in which objects are represented internally. If we ever support a wider variety of translation and transformation paths, everything would have to be transformed into that canonical format first.

In practice, the canonical form of an object is defined by the Java class and its field names. For instance, a query for contract negotiations must be specified using the field names of a ContractNegotiation object:

public class ContractNegotiation {

// ...

private ContractAgreement contractAgreement;

// ...

}

public class ContractAgreement {

// ...

private final String assetId;

}

Consequently, contractAgreement.assetId would be valid, whereas contract_agreement.asset_id would be invalid. In other words, the left-hand operand looks as if we were traversing the Java object graph. This is what we call the canonical form. Note the omission of the root object contractNegotiation!
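The canonical form can be made tangible with a small reflection-based sketch that resolves a left-hand operand by walking Java field names, starting below the (omitted) root object. CanonicalPath and the two simplified entity classes are hypothetical; the SQL stores do not evaluate queries this way, they translate the path into SQL.

```java
import java.lang.reflect.Field;

// Hypothetical helper illustrating the canonical form; not part of EDC.
class CanonicalPath {
    // Resolves e.g. "contractAgreement.assetId" by following Java field names.
    static Object resolve(Object root, String path) {
        Object current = root;
        for (var fieldName : path.split("\\.")) {
            if (current == null) {
                return null;
            }
            current = readField(current, fieldName);
        }
        return current;
    }

    private static Object readField(Object target, String name) {
        for (Class<?> type = target.getClass(); type != null; type = type.getSuperclass()) {
            try {
                Field field = type.getDeclaredField(name);
                field.setAccessible(true);
                return field.get(target);
            } catch (NoSuchFieldException e) {
                // keep searching in the superclass
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
        throw new IllegalArgumentException("No field '" + name + "' on " + target.getClass().getSimpleName());
    }
}

// Simplified stand-ins for the entities shown above.
class ContractAgreement {
    private final String assetId;

    ContractAgreement(String assetId) {
        this.assetId = assetId;
    }
}

class ContractNegotiation {
    private final ContractAgreement contractAgreement;

    ContractNegotiation(ContractAgreement contractAgreement) {
        this.contractAgreement = contractAgreement;
    }
}
```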

3.1 Translation Mappings

Translation mappings are EDC's way to map a QuerySpec to SQL statements. At their core, they contain a Map from Java entity field names to the related SQL column names.

In order to decouple the canonical form from the SQL schema (or any other database schema), a mapping scheme exists to map the canonical model onto the SQL model. This TranslationMapping is essentially a graph-like metamodel of the entities: every Java entity has a related mapping class that contains its field names and the associated SQL column names. The convention is to append *Mapping to the class name, e.g. PolicyDefinitionMapping.

3.1.1 Mapping primitive fields

Primitive fields are stored directly as columns in SQL tables. Thus, mapping primitive data types is trivial: a simple one-to-one mapping is all that is needed. For example, ContractNegotiation.counterPartyAddress would be represented in the ContractNegotiationMapping as the entry

"counterPartyAddress"->"counterparty_address"

When constructing WHERE/AND clauses, the canonical property is simply replaced by the respective SQL column name.

3.1.2 Mapping complex objects

For fields of complex type, such as the ContractNegotiation.contractAgreement field, the mapping has to account for how the relational data model is defined. There are two basic variants we use:

Option 1: using foreign keys

In this case, the referenced object is stored in a separate table using a foreign key relation. Thus, the canonical property (contractAgreement) is mapped onto the SQL schema using another *Mapping class; here, this would be the ContractAgreementMapping. When resolving a property in the canonical format (contractAgreement.assetId), this means we must recursively descend into the model graph and resolve the correct SQL expression.

Note: mapping one-to-many relations (= arrays/lists) with foreign keys is not implemented at this time.
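Resolving a canonical path against primitive and foreign-key mappings can be sketched as a recursive lookup. SimpleTranslationMapping is a hypothetical, simplified stand-in for EDC's TranslationMapping: a String entry is a column, a nested mapping represents a foreign-key relation. The real implementation also has to take table aliases and joins into account.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified take on a TranslationMapping; not EDC's class.
class SimpleTranslationMapping {
    // canonical field name -> column name (String) or nested SimpleTranslationMapping
    private final Map<String, Object> fieldMap = new HashMap<>();

    SimpleTranslationMapping add(String fieldName, Object target) {
        fieldMap.put(fieldName, target);
        return this;
    }

    // Resolves e.g. "contractAgreement.assetId" by recursively descending into nested mappings.
    String getStatement(String canonicalPath) {
        var dot = canonicalPath.indexOf('.');
        var head = dot < 0 ? canonicalPath : canonicalPath.substring(0, dot);
        var target = fieldMap.get(head);
        if (target == null) {
            throw new IllegalArgumentException("Unknown field: " + head);
        }
        if (target instanceof SimpleTranslationMapping nested) {
            if (dot < 0) {
                throw new IllegalArgumentException("'" + head + "' is a complex field, a sub-path is required");
            }
            return nested.getStatement(canonicalPath.substring(dot + 1));
        }
        if (dot >= 0) {
            throw new IllegalArgumentException("'" + head + "' is a primitive field and has no sub-path");
        }
        return (String) target;
    }
}
```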

Option 2a: encoding the object

Another popular way to store complex objects is to encode them in JSON and store them in a VARCHAR column. In PostgreSQL we use the specific JSON type instead of VARCHAR. For example, the TransferProcess is stored in a table called edc_transfer_process; its DataAddress property is encoded in JSON and stored in a JSON field.

Querying for TransferProcess objects: when mapping the filter expression contentDataAddress.properties.somekey=somevalue, the contentDataAddress is represented as JSON. Therefore, in the TransferProcessMapping the contentDataAddress field maps to a JsonFieldTranslator:

public TransferProcessMapping(TransferProcessStoreStatements statements) {

// ...

add(FIELD_CONTENTDATAADDRESS, new JsonFieldTranslator(statements.getContentDataAddressColumn()));

// ...

}

which would then get translated to:

SELECT *

FROM edc_transfer_process

-- omit LEFT OUTER JOIN for readability

WHERE content_data_address -> 'properties' ->> 'somekey' = 'somevalue'
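The translation shown above can be sketched as follows. JsonPathSql is a hypothetical helper, not EDC's JsonFieldTranslator: intermediate keys use PostgreSQL's -> operator (which yields JSON), the last key uses ->> (which yields text), and the compared value is left as a bound parameter.

```java
import java.util.List;

// Hypothetical sketch of rendering a JSON column path with PostgreSQL JSON operators.
class JsonPathSql {
    static String whereClause(String column, List<String> path, String sqlOperator) {
        if (path.isEmpty()) {
            throw new IllegalArgumentException("path must not be empty");
        }
        var sb = new StringBuilder(column);
        for (int i = 0; i < path.size(); i++) {
            // "->" keeps navigating JSON, "->>" extracts the final value as text
            var arrow = i == path.size() - 1 ? " ->> " : " -> ";
            // keys are SQL string literals, so single quotes are escaped by doubling them
            sb.append(arrow).append('\'').append(path.get(i).replace("'", "''")).append('\'');
        }
        return sb.append(' ').append(sqlOperator).append(" ?").toString();
    }
}
```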

Note that JSON queries are specific to PostgreSQL and are not portable to other database technologies!

Option 2b: encoding lists/arrays

Like accessing objects, accessing lists/arrays of objects is possible using special JSON operators. In this case, the special PostgreSQL function json_array_elements() is used. Please refer to the official documentation.

For an example of how this is done, please look at how the TransferProcessMapping maps a ResourceManifest, which in

turn contains a List<ResourceDefinition> using the ResourceManifestMapping.

5 - Logging

A comprehensive and consistent way of logging is a crucial pillar for operability. Therefore, the following rules should be followed:

Logging component

Logs must only be produced using the Monitor service, which offers 4 different log levels:

severe

Error events that might lead the application to abort or still allow it to continue running.

Used in case of an unexpected interruption of the flow or when something is broken, i.e. an operator has to take action.

e.g. service crashes, database in an illegal state, … even if there is a chance of self-recovery.

warning

Messages about potentially harmful situations.

Used in case of an expected event that does not interrupt the flow but that should be taken into consideration.

info

Informational messages that highlight the progress of the application at a coarse-grained level.

Used to describe the normal flow of the application.

debug

Fine-grained informational events that are most useful to debug an application.

Used to describe details of the normal flow that are not interesting for a production environment.

What should be logged

- every exception, with severe or warning

- every Result object evaluated as failed:

  - with severe if this is something that interrupts the flow and someone should take care of it immediately

  - with warning if this is something that doesn’t interrupt the flow but someone should take care of it, because it could lead to worse results in the future

- every important message that’s not an error, with info

- other informative events like incoming calls at the API layer or state changes, with debug
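The rules for failed results can be sketched like this. SimpleMonitor, SimpleResult and RecordingMonitor are hypothetical stand-ins for EDC's Monitor and Result types, and logging a success at debug level is an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins: EDC's Monitor and Result types are richer than this.
interface SimpleMonitor {
    void severe(String message);
    void warning(String message);
    void info(String message);
    void debug(String message);
}

record SimpleResult(boolean succeeded, String failureDetail) {
    static SimpleResult success() { return new SimpleResult(true, null); }
    static SimpleResult failure(String detail) { return new SimpleResult(false, detail); }
}

class ResultLogging {
    // A failed result is severe when it interrupts the flow (someone must act now),
    // otherwise it is a warning; a success only gets a debug line here.
    static void log(SimpleMonitor monitor, String operation, SimpleResult result, boolean interruptsFlow) {
        if (result.succeeded()) {
            monitor.debug(operation + " succeeded");
        } else if (interruptsFlow) {
            monitor.severe(operation + " failed: " + result.failureDetail());
        } else {
            monitor.warning(operation + " failed: " + result.failureDetail());
        }
    }
}

// Records messages instead of printing them, which is handy for checking log behaviour in tests.
class RecordingMonitor implements SimpleMonitor {
    final List<String> entries = new ArrayList<>();
    public void severe(String m) { entries.add("SEVERE " + m); }
    public void warning(String m) { entries.add("WARNING " + m); }
    public void info(String m) { entries.add("INFO " + m); }
    public void debug(String m) { entries.add("DEBUG " + m); }
}
```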

What should not be logged

- secrets and any other potentially sensitive data, like the payload that is passed through the data-plane

- an exception that will be thrown in the same block

- not strictly necessary information, like “entering method X”, “leaving block Y”, “returning HTTP 200”

6 - Writing tests

1. Adding EDC test fixtures

To add EDC test utilities and test fixtures to downstream projects, simply add the following Gradle dependency:

testImplementation("org.eclipse.edc:junit:<version>")

2. Controlling test verbosity

To run tests verbosely (displaying test events as well as output and error streams on the console), use the following Gradle project property:

./gradlew test -PverboseTest

3. Definition and distinction

- unit tests test one single class by stubbing or mocking its dependencies.

- integration tests test one particular aspect of the software, which may involve external systems.

- system tests are end-to-end tests that rely on the entire system being present.

3.1 Coding Guidelines

The EDC codebase has a few annotations, which focus on two important aspects:

- excluding integration tests from the JUnit test runner by default, as these tests rely on external systems which might not be available during local execution.

- categorizing integration tests with the help of JUnit Tags.

Following are some available annotations:

@IntegrationTest: marks an integration test with the IntegrationTest JUnit tag. This is the default tag and can be used if you do not want to specify any other tags on your test for further categorization.

The annotations below are used to categorize integration tests based on the runtime components that must be available for the test to run. All of them are composite annotations and contain the @IntegrationTest annotation as well.

- @ApiTest: marks an integration test that focuses on testing a REST API. To do that, a runtime containing the controller class with all its collaborators is spun up.

- @EndToEndTest: marks an integration test with the EndToEndTest JUnit tag. This should be used when the entire system is involved in a test.

- @ComponentTest: marks an integration test with the ComponentTest JUnit tag. This should be used when the test does not use any external systems, but uses actual collaborator objects instead of mocks.

- there are other, more specific tags for cloud-vendor specific environments, like @AzureStorageIntegrationTest or @AwsS3IntegrationTest. Some of those environments can be emulated (with test containers), others can’t.

We encourage you to use these annotations, but if your integration test does not fit into any of them and you want to categorize it based on its technology, feel free to create a new annotation. Make sure it is a composite annotation that contains @IntegrationTest. If you do not wish to categorize based on technology, you can use the existing @IntegrationTest annotation.
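Composite annotations work because an annotation can itself be annotated, and JUnit Jupiter recognizes tags declared through such meta-annotations. The sketch below shows the mechanism with hypothetical stand-in annotations (the real @IntegrationTest carries JUnit's @Tag instead) and a small reflective check that finds @IntegrationTest through a composite annotation. MyDatabaseIntegrationTest and AnnotationInspector are illustrative names only.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.HashSet;
import java.util.Set;

// Stand-in for EDC's @IntegrationTest (the real one is meta-annotated with JUnit's @Tag).
@Target({ElementType.TYPE, ElementType.ANNOTATION_TYPE})
@Retention(RetentionPolicy.RUNTIME)
@interface IntegrationTest {}

// Hypothetical technology-specific composite annotation: itself annotated with @IntegrationTest.
@Target(ElementType.TYPE)
@Retention(RetentionPolicy.RUNTIME)
@IntegrationTest
@interface MyDatabaseIntegrationTest {}

@MyDatabaseIntegrationTest
class SomeStoreIntegrationTest {}

class AnnotationInspector {
    // True if the class carries the annotation directly or through any meta-annotation.
    static boolean isMarkedWith(Class<?> type, Class<? extends Annotation> wanted) {
        return search(type.getAnnotations(), wanted, new HashSet<>());
    }

    private static boolean search(Annotation[] annotations, Class<? extends Annotation> wanted, Set<Class<?>> seen) {
        for (var annotation : annotations) {
            var annotationType = annotation.annotationType();
            if (annotationType.equals(wanted)) {
                return true;
            }
            // "seen" guards against cycles, e.g. @Retention is annotated with @Retention
            if (seen.add(annotationType) && search(annotationType.getAnnotations(), wanted, seen)) {
                return true;
            }
        }
        return false;
    }
}
```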

- By default, the JUnit test runner ignores all integration tests, because the root build.gradle.kts file excludes all tests marked with the IntegrationTest JUnit tag.

- If your integration test does not rely on an external system, then you may not want to use the above-mentioned annotations.

All integration tests should carry an annotation to categorize them and use the "...IntegrationTest" suffix to distinguish them clearly from unit tests. They should reside in the same package as unit tests, because all tests should maintain package consistency with their test subject.

Any credentials, secrets, passwords, etc. that are required by the integration tests should be passed in using environment variables. A good way to access them is ConfigurationFunctions.propOrEnv(), because then the credentials can also be supplied via system properties.
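A lookup that prefers a system property and falls back to an environment variable might look like the following sketch. PropOrEnv is a hypothetical re-implementation; the lookup order and the environment-variable naming convention (upper case, dots replaced by underscores) are assumptions, so check ConfigurationFunctions.propOrEnv() for the actual behaviour.

```java
import java.util.Locale;

// Hypothetical re-implementation for illustration only; not EDC's ConfigurationFunctions.
class PropOrEnv {
    static String propOrEnv(String key, String defaultValue) {
        // 1. system property, e.g. -Dit.postgres.password=...
        var fromProperty = System.getProperty(key);
        if (fromProperty != null && !fromProperty.isBlank()) {
            return fromProperty;
        }
        // 2. environment variable, assumed naming: IT_POSTGRES_PASSWORD
        var envName = key.toUpperCase(Locale.ROOT).replace('.', '_');
        var fromEnv = System.getenv(envName);
        if (fromEnv != null && !fromEnv.isBlank()) {
            return fromEnv;
        }
        // 3. default value
        return defaultValue;
    }
}
```

This way a CI job can expose credentials as environment variables, while a developer can pass the same values as system properties to the test JVM.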

There is no one-size-fits-all guideline on whether to perform setup tasks in @BeforeAll or @BeforeEach; it depends on the concrete system you’re using. As a general rule of thumb, long-running one-time setup should be done in @BeforeAll so as not to extend the run-time of the test unnecessarily. That said, in most cases it is not advisable to deploy/provision the external system itself in either one of those methods. In other words, manually provisioning a cloud resource should generally be avoided, because it introduces code that has nothing to do with the test and may cause security problems.

If possible, all external systems should be deployed using Testcontainers. Alternatively, in special situations there might be a dedicated test instance running continuously, e.g. a cloud-based database test instance. In the latter case, please be careful to avoid conflicts (e.g. database names) when multiple test runners access that system simultaneously, and properly clean up any residue before and after the test.

4. Integration Tests

4.1 TL;DR

Use integration tests only when necessary, keep them concise, implement them defensively using timeouts and randomized names, and use test containers for external systems wherever possible. This increases portability.

4.2 When to use them

Generally speaking, developers should favor writing unit tests over integration tests, because they are simpler, more stable and typically run faster. Sometimes that is not (easily) possible, especially when an implementation relies on an external system that is not easily mocked or stubbed, such as a database.

In such cases, writing unit tests can be more involved than writing an integration test. For example, say you want to test your implementation of a Postgres-backed store. You would have to mock the behaviour of the PostgreSQL database, which - while certainly possible - can get complicated pretty quickly. You might still choose to do that for simpler scenarios, but eventually you will probably want to write an integration test that uses an actual PostgreSQL instance.

4.4 Running integration tests locally

As mentioned above, the JUnit runner won’t pick up integration tests unless a tag is provided. For example, to run PostgreSQL integration tests, pass the includeTags parameter with the tag value to the gradlew command:

./gradlew test -p path/to/module -DincludeTags="PostgresqlIntegrationTest"

Running all tests (unit & integration) can be achieved by passing the runAllTests=true parameter to the gradlew command:

./gradlew test -DrunAllTests="true"

4.5 Running them in the CI pipeline

All integration tests should go into the verify.yaml workflow. Every “technology” should have its own job, and technology-specific tests can be targeted using JUnit tags with the -DincludeTags property, as described above.

A GitHub composite action was created to encapsulate the tasks of setting up Java/Gradle and running tests.

For example, let’s assume we’ve implemented a PostgreSQL-based store for SomeObject, and that verify.yaml already contains a “Postgres” job. Then every module that contains a test class annotated with @PostgresqlIntegrationTest will be loaded and executed there. This tagging is used by the CI pipeline step to target and execute the integration tests related to Postgres.

Let’s also make sure that the code is checked out first and that integration tests only run on the upstream repo.

jobs:
  Postgres-Integration-Tests:
    # run only on upstream repo
    if: github.repository_owner == 'eclipse-edc'
    runs-on: ubuntu-latest

    # taken from https://docs.github.com/en/actions/using-containerized-services/creating-postgresql-service-containers
    services:
      # Label used to access the service container
      postgres:
        # Docker Hub image
        image: postgres
        # Provide the password for postgres
        env:
          POSTGRES_PASSWORD: ${{ secrets.POSTGRES_PASSWORD }}
        # Map the container port to the host, since the tests run directly on the runner
        ports:
          - 5432:5432

    steps:
      # local actions (./.github/actions/...) are only available after checkout
      - uses: actions/checkout@v4
      - uses: ./.github/actions/setup-build
      - name: Postgres Tests
        uses: ./.github/actions/run-tests
        with:
          command: ./gradlew test -DincludeTags="PostgresqlIntegrationTest"

[ ... ]

4.6 Do’s and Don’ts

DO:

- aim to cover as many test cases with unit tests as possible

- use integration tests sparingly and only when unit tests are not practical

- deploy the external system as a test container if possible, or

- use a dedicated always-on test instance (esp. cloud resources)

- take into account that external systems might experience transient failures or have degraded performance, so test methods should have a timeout so as not to block the runner indefinitely.

- use randomized strings for things like database/table/bucket/container names, etc., especially when the external system does not get destroyed after the test.

DO NOT:

- try to cover everything with integration tests. It’s typically a code smell if there are no corresponding unit tests for an integration test.

- slip into a habit of testing the external system rather than your usage of it

- store secrets directly in the code. GitHub will warn about that.

- perform complex external system setup in @BeforeEach or @BeforeAll

- add production code that is only ever used from tests. Typical smells are protected or package-private methods that exist only for testing.
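Two of the DOs above, randomized names and timeouts, can be sketched with plain JDK classes. DefensiveTestHelpers is a hypothetical helper; in a JUnit 5 test, the @Timeout annotation would usually take the place of runWithTimeout.

```java
import java.time.Duration;
import java.util.UUID;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical helpers illustrating randomized names and timeouts; not part of EDC.
class DefensiveTestHelpers {
    // e.g. "test_table_3f2a9c...": the prefix followed by 32 lowercase hex characters
    static String randomName(String prefix) {
        return prefix + "_" + UUID.randomUUID().toString().replace("-", "");
    }

    // Runs the action, failing with an AssertionError if it does not finish in time.
    static <T> T runWithTimeout(Callable<T> action, Duration timeout) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        Future<T> future = executor.submit(action);
        try {
            return future.get(timeout.toMillis(), TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the hanging call so the runner is not blocked
            throw new AssertionError("operation did not finish within " + timeout, e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            executor.shutdownNow();
        }
    }
}
```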

5. System tests

System tests are tests in which an EDC runtime runs in the JUnit process.

To benefit from fixtures that target the Management API, a test fixtures module is available; it can be added as a dependency:

testImplementation(testFixtures("org.eclipse.edc:management-api-test-fixtures:<version>"))

5.1 Running an EDC instance from a JUnit test

In some circumstances it is necessary to launch an EDC runtime and execute tests against it. This could be a fully-fledged connector runtime, replete with persistence and all bells and whistles, or a partial runtime that contains lots of mocks and stubs. One prominent example of this is API tests. At some point, you’ll want to send REST requests using an HTTP client to the actual EDC runtime, using JSON-LD expansion, transformation etc. and real database infrastructure.

EDC provides a nifty way to launch any runtime from within the JUnit process, which makes it easy to configure and debug not only the actual test code, but also the system-under-test, i.e. the runtime.

To do that, two parts are needed:

- a runner: a module that contains the test logic

- one or several runtimes: one or more modules that define a standalone runtime (e.g. a runnable EDC definition)

The runner can load an EDC runtime by using the @RegisterExtension annotation:

@EndToEndTest

class YourEndToEndTest {

@RegisterExtension

private final RuntimeExtension controlPlane = new RuntimePerClassExtension(new EmbeddedRuntime(

"control-plane", // the runtime's name, used for log output

// all modules to be put on the runtime classpath

":core:common:connector-core",

":core:control-plane:control-plane-core",

":core:data-plane-selector:data-plane-selector-core",

":extensions:control-plane:transfer:transfer-data-plane-signaling",

":extensions:common:iam:iam-mock",

":extensions:common:http",

":extensions:common:api:control-api-configuration")

// the runtime configuration is passed through a lazy provider

.configurationProvider(() -> ConfigFactory.fromMap(Map.of(

"web.http.control.port", String.valueOf(getFreePort()),

"web.http.control.path", "/control"))

)

);

}

This example launches a runtime called "control-plane", adds the listed Gradle modules to its classpath and passes the configuration as a map to it. It does that from within the JUnit process, so the "control-plane" runtime can be debugged from the IDE.

The example above initializes and starts the runtime once, before all tests run (hence the name “RuntimePerClassExtension”). Alternatively, there is the RuntimePerMethodExtension, which re-initializes and starts the runtime before every test method.

In most use cases, RuntimePerClassExtension is preferable, because it avoids having to start the runtime for every test. There are cases where the RuntimePerMethodExtension is useful, for example when the runtime is mutated during tests and cleaning up data stores is not practical. Be aware of the added test execution time penalty, though.

To make sure that the runtime extensions are correctly built and available, they need to be set as dependencies of the runner module using testCompileOnly.

This ensures proper dependency isolation between runtimes (very important when the test needs to run two different components, like a control plane and a data plane).

Technically, the number of runtimes launched that way is not limited (other than by host system resources), so theoretically, an entire dataspace with N participants could be launched that way…

5.2 Solve potential port conflict issues with Testcontainers

Using Testcontainers in system tests could lead to port conflicts, especially if the random ports used by the EDC runtimes are generated eagerly, before the Testcontainers instances are spun up.

To solve this problem, it’s good to follow the “lazy configuration” practice:

- generate the random ports in the configurationProvider that’s passed to the EmbeddedRuntime; this ensures that the port number is assigned lazily, right before the runtime starts up

- if a port also needs to be configured in another runtime (e.g. to permit communication on that port by the other runtime), it can be instantiated lazily using the LazySupplier, as shown in the example:

@EndToEndTest

class YourEndToEndTest {

private final LazySupplier<Integer> port = new LazySupplier<>(Ports::getFreePort);

@RegisterExtension

private final RuntimeExtension connector = new RuntimePerClassExtension(new EmbeddedRuntime(

"connector",

":runtime")

.configurationProvider(() -> ConfigFactory.fromMap(Map.of(

// port will be lazily instantiated here...

"web.http.port", String.valueOf(port.get())

))

)

);

@RegisterExtension

private final RuntimeExtension anotherConnector = new RuntimePerClassExtension(new EmbeddedRuntime(

"connector",

":runtime")

.configurationProvider(() -> ConfigFactory.fromMap(Map.of(

// ... or it could be instantiated lazily here!

"connector.port", String.valueOf(port.get())

))

)

);

}
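The example relies on the supplier being evaluated once, on first use, and then returning the same value. The sketch below illustrates that behaviour with a hypothetical MemoizingSupplier; it is not the source of EDC's LazySupplier.

```java
import java.util.function.Supplier;

// Hypothetical stand-in: the delegate runs on the first get() only,
// and every later call returns the same cached value.
class MemoizingSupplier<T> implements Supplier<T> {
    private final Supplier<T> delegate;
    private boolean computed;
    private T value;

    MemoizingSupplier(Supplier<T> delegate) {
        this.delegate = delegate;
    }

    @Override
    public synchronized T get() {
        if (!computed) {
            value = delegate.get();
            computed = true;
        }
        return value;
    }
}
```

Because both configuration providers share the same instance, both runtimes see the same port, and that port is only picked when one of the providers is first evaluated.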

7 - Runtime

7.1 - JSON-LD

Here is a simple example taken from json-ld.org:

{

"@context": "https://json-ld.org/contexts/person.jsonld",

"@id": "http://dbpedia.org/resource/John_Lennon",

"name": "John Lennon",

"born": "1940-10-09",

"spouse": "http://dbpedia.org/resource/Cynthia_Lennon"

}

It is similar to how a Person would be represented in plain JSON, with additional keywords such as @context and @id.

The @id is used to uniquely identify an object.

The @context is used to define how terms should be interpreted, and it helps express specific identifiers with short-hand names instead of IRIs.

An exhaustive list of reserved keywords and their meaning is available here.

In the above example the @context is a remote one, but the @context can also be defined inline. Here is the same JSON-LD object using locally defined terms:

{

"@context": {

"xsd": "http://www.w3.org/2001/XMLSchema#",

"name": "http://xmlns.com/foaf/0.1/name",

"born": {

"@id": "http://schema.org/birthDate",

"@type": "xsd:date"

},

"spouse": {

"@id": "http://schema.org/spouse",

"@type": "@id"

}

},

"@id": "http://dbpedia.org/resource/John_Lennon",

"name": "John Lennon",

"born": "1940-10-09",

"spouse": "http://dbpedia.org/resource/Cynthia_Lennon"

}

which defines the name, born and spouse terms inline.

The two objects have the same meaning as Linked Data.

A JSON-LD document can be described in multiple forms and, by applying certain transformations, a document can change shape without changing its meaning.

Relevant forms in the realm of EDC are:

- Expanded document form

- Compacted document form

The examples above are in compacted form. Applying the expansion algorithm produces the following output:

[

{

"@id": "http://dbpedia.org/resource/John_Lennon",

"http://schema.org/birthDate": [

{

"@type": "http://www.w3.org/2001/XMLSchema#date",

"@value": "1940-10-09"

}

],

"http://xmlns.com/foaf/0.1/name": [

{

"@value": "John Lennon"

}

],

"http://schema.org/spouse": [

{

"@id": "http://dbpedia.org/resource/Cynthia_Lennon"

}

]

}

]

Expansion is the process of taking a JSON-LD document as input and applying the @context so that it is no longer necessary, as all terms are resolved to their IRI representation.

Compaction is the inverse process. It takes a JSON-LD document in expanded form as input and, by applying the supplied @context, creates the compacted form.

For experimenting with JSON-LD and its processing algorithms, the JSON-LD playground is a useful tool.
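The core of expansion, turning short names into IRIs with the help of a context, can be illustrated with a deliberately simplified sketch. TermExpander is hypothetical: it only handles string term definitions, compact IRIs and @vocab, whereas a real JSON-LD processor also handles expanded term definitions (such as born above), type coercion, remote contexts and much more.

```java
import java.util.Map;

// Hypothetical, simplified term resolution; not a JSON-LD processor.
class TermExpander {
    private final Map<String, String> definitions; // terms and prefixes -> IRIs or compact IRIs
    private final String vocab;                   // value of @vocab, may be null

    TermExpander(Map<String, String> definitions, String vocab) {
        this.definitions = definitions;
        this.vocab = vocab;
    }

    String expand(String key) {
        // 1. a term defined in the context (its value may itself be a compact IRI, e.g. "sw:faction")
        var defined = definitions.get(key);
        if (defined != null) {
            return resolvePrefix(defined);
        }
        // 2. a compact IRI "prefix:suffix" or an absolute IRI
        if (key.indexOf(':') > 0) {
            return resolvePrefix(key);
        }
        // 3. fall back to @vocab, if present
        return vocab != null ? vocab + key : key;
    }

    private String resolvePrefix(String value) {
        var colon = value.indexOf(':');
        if (colon > 0) {
            var prefixIri = definitions.get(value.substring(0, colon));
            if (prefixIri != null) {
                return prefixIri + value.substring(colon + 1);
            }
        }
        return value; // already an absolute IRI, e.g. "http://..."
    }
}
```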

1. JSON-LD in EDC

EDC uses JSON-LD as the primary serialization format at the API layer, and at runtime EDC manages objects in their expanded form, for example when transforming a JsonObject into EDC entities and back in transformers, or when validating an input JsonObject at the API level.

Extensible properties in entities are always stored in expanded form.

To achieve that, EDC uses an interceptor (JerseyJsonLdInterceptor) that always expands the JsonObject on ingress and compacts it on egress.

EDC uses JSON-LD for two main reasons:

First, EDC embraces different protocols and standards such as:

and they all rely on JSON-LD as their serialization format.

Second, EDC allows extending entities like Asset with custom properties, and uses JSON-LD as the way to extend objects with custom namespaces.

EDC handles JSON-LD through the JsonLd SPI. It supports different operations and configurations for managing JSON-LD in the EDC runtime.

It supports the expansion and compaction processes:

Result<JsonObject> expand(JsonObject json);

Result<JsonObject> compact(JsonObject json, String scope);

and allows configuring which @context and namespaces to use when processing JSON-LD in a specific scope.

For example, when the JsonLd service is used in the Management API, the configured @context and namespaces might differ from those used when the same service is used in the DSP layer.

The JsonLd service can also configure cached contexts, i.e. local copies of remote contexts. This limits the network requests required when processing JSON-LD and reduces the attack surface if the remote host of the context is compromised.

By default, EDC makes use of @vocab for processing input/output JSON-LD documents. This provides a default vocabulary for extensible properties. An ongoing initiative, available with this extension, aims to provide a cached terms mapping (context) for the EDC Management API. The remote context definition is available here.

Implementors who need additional @context and namespaces to be supported in the EDC runtime should develop a custom extension that registers the required @context and namespace.

For example, let’s say we want to support a custom namespace http://w3id.org/starwars/v0.0.1/ns/ in the extensible properties of an Asset.

The input JSON would look like this:

{

"@context": {

"@vocab": "https://w3id.org/edc/v0.0.1/ns/",

"sw": "http://w3id.org/starwars/v0.0.1/ns/"

},

"@type": "Asset",

"@id": "79d9c360-476b-47e8-8925-0ffbeba5aec2",

"properties": {

"sw:faction": "Galactic Imperium",

"sw:person": {

"sw:name": "Darth Vader",

"sw:webpage": "https://death.star"

}

},

"dataAddress": {

"@type": "DataAddress",

"type": "myType"

}

}

Even if we don’t register any additional @context or namespace prefix in the EDC runtime, the Asset will still be persisted correctly, since the JSON-LD gets expanded correctly and stored in expanded form.

However, on egress the JSON-LD document always gets compacted, and without additional configuration it will look like this:

{

"@id": "79d9c360-476b-47e8-8925-0ffbeba5aec2",

"@type": "Asset",

"properties": {

"http://w3id.org/starwars/v0.0.1/ns/faction": "Galactic Imperium",

"http://w3id.org/starwars/v0.0.1/ns/person": {

"http://w3id.org/starwars/v0.0.1/ns/name": "Darth Vader",

"http://w3id.org/starwars/v0.0.1/ns/webpage": "https://death.star"

},

"id": "79d9c360-476b-47e8-8925-0ffbeba5aec2"

},

"dataAddress": {

"@type": "DataAddress",

"type": "myType"

},

"@context": {

"@vocab": "https://w3id.org/edc/v0.0.1/ns/",

"edc": "https://w3id.org/edc/v0.0.1/ns/",

"odrl": "http://www.w3.org/ns/odrl/2/"

}

}

This means that the IRIs are not shortened to terms or compact IRIs. This might be acceptable for some runtimes and configurations, but if implementors want to improve usability and ease of use, two main strategies can be applied:

1.1 Compact IRI

The first strategy is to register a namespace prefix in an extension:

public class MyExtension implements ServiceExtension {

@Inject

private JsonLd jsonLd;

@Override

public void initialize(ServiceExtensionContext context) {

jsonLd.registerNamespace("sw", "http://w3id.org/starwars/v0.0.1/ns/", "MANAGEMENT_API");

}

}

This shortens the IRIs to compact IRIs when compacting the same JSON-LD:

{

"@id": "79d9c360-476b-47e8-8925-0ffbeba5aec2",

"@type": "Asset",

"properties": {

"sw:faction": "Galactic Imperium",

"sw:person": {

"sw:name": "Darth Vader",

"sw:webpage": "https://death.star"

},

"id": "79d9c360-476b-47e8-8925-0ffbeba5aec2"

},

"dataAddress": {

"@type": "DataAddress",

"type": "myType"

},

"@context": {

"@vocab": "https://w3id.org/edc/v0.0.1/ns/",

"edc": "https://w3id.org/edc/v0.0.1/ns/",

"odrl": "http://www.w3.org/ns/odrl/2/",

"sw": "http://w3id.org/starwars/v0.0.1/ns/"

}

}

1.2 Custom Remote Context

An improved approach requires developers to draft a context (which should be resolvable via a URL), for example http://w3id.org/starwars/context.jsonld, that contains the term definitions.

An example of a definition might look like this:

{
  "@context": {
    "@version": 1.1,
    "sw": "http://w3id.org/starwars/v0.0.1/ns/",
    "person": "sw:person",
    "faction": "sw:faction",
    "name": "sw:name",
    "webpage": "sw:webpage"
  }
}

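Then, in an extension, the context URL should be registered in the desired scope and cached:

import java.net.URI;
import java.nio.file.Path;

public class MyExtension implements ServiceExtension {

    @Inject
    private JsonLd jsonLd;

    @Override
    public void initialize(ServiceExtensionContext context) {
        // use the remote context in the Management API scope
        jsonLd.registerContext("http://w3id.org/starwars/context.jsonld", "MANAGEMENT_API");

        // serve the context from a local copy instead of fetching it remotely;
        // the file location is only an example, it could also be loaded from the classpath
        URI documentLocation = Path.of("starwars-context.jsonld").toUri();
        jsonLd.registerCachedDocument("http://w3id.org/starwars/context.jsonld", documentLocation);
    }
}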

With this configuration, the JSON-LD will be represented without the sw prefix, since the term mappings are defined in the remote context http://w3id.org/starwars/context.jsonld:

{

"@id": "79d9c360-476b-47e8-8925-0ffbeba5aec2",

"@type": "Asset",

"properties": {

"faction": "Galactic Imperium",

"person": {

"name": "Darth Vader",

"webpage": "https://death.star"

},

"id": "79d9c360-476b-47e8-8925-0ffbeba5aec2"

},

"dataAddress": {

"@type": "DataAddress",

"type": "myType"

},

"@context": [

"http://w3id.org/starwars/context.jsonld",

{

"@vocab": "https://w3id.org/edc/v0.0.1/ns/",

"edc": "https://w3id.org/edc/v0.0.1/ns/",

"odrl": "http://www.w3.org/ns/odrl/2/"

}

]

}

In case of a name clash in the term definitions, the JSON-LD processor should fall back to the compact IRI representation.
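The three outputs shown above (absolute IRIs, compact IRIs and plain terms) follow from the order in which a compactor tries to shorten an IRI. The hypothetical IriCompactor below approximates that order: an exact term definition first, then a @vocab-relative name, then a compact IRI via a registered prefix, and finally the absolute IRI. Real IRI compaction, including its name-clash handling, is considerably more involved.

```java
import java.util.Map;

// Hypothetical, simplified approximation of IRI compaction; not a JSON-LD processor.
class IriCompactor {
    private final Map<String, String> terms;    // term -> absolute IRI, e.g. "faction" -> ".../ns/faction"
    private final Map<String, String> prefixes; // prefix -> namespace IRI, e.g. "sw" -> ".../ns/"
    private final String vocab;                 // value of @vocab, may be null

    IriCompactor(Map<String, String> terms, Map<String, String> prefixes, String vocab) {
        this.terms = terms;
        this.prefixes = prefixes;
        this.vocab = vocab;
    }

    String compact(String iri) {
        // 1. a term whose definition is exactly this IRI
        for (var entry : terms.entrySet()) {
            if (entry.getValue().equals(iri)) {
                return entry.getKey();
            }
        }
        // 2. a name relative to @vocab, unless it would clash with a defined term
        if (vocab != null && iri.startsWith(vocab) && iri.length() > vocab.length()) {
            var suffix = iri.substring(vocab.length());
            if (!terms.containsKey(suffix) && !suffix.contains(":") && !suffix.contains("/")) {
                return suffix;
            }
        }
        // 3. a compact IRI "prefix:suffix"
        for (var entry : prefixes.entrySet()) {
            var namespace = entry.getValue();
            if (iri.startsWith(namespace) && iri.length() > namespace.length()) {
                return entry.getKey() + ":" + iri.substring(namespace.length());
            }
        }
        // 4. nothing applies: keep the absolute IRI
        return iri;
    }
}
```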

1.1 JSON-LD Validation

EDC provides a mechanism to validate JSON-LD objects. The validation phase is typically handled at the network/controller layer. For each entity, identified by its own @type, it is possible to register a custom Validator<JsonObject> using the JsonObjectValidatorRegistry. By default, EDC provides validation for all the entities it manages, such as Asset, ContractDefinition, etc.

For a custom validator it is possible either to implement the Validator<JsonObject> interface (not recommended) or to use the bundled JsonObjectValidator, which is a declarative way of configuring a validator for an object through the builder pattern. It also comes with a preset of validation rules such as id not empty, mandatory properties and many more.
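To illustrate what "declarative, through the builder pattern" means, here is a minimal, self-contained sketch. It is not EDC's JsonObjectValidator: the class SimpleObjectValidator and its methods verifyOptionalIdNotBlank and verifyMandatory are hypothetical, and a plain Map of expanded property names stands in for a jakarta.json JsonObject.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, simplified stand-in for a declarative JSON object validator.
// A Map of expanded property names to values replaces the jakarta.json JsonObject.
final class SimpleObjectValidator {

    // Each rule returns an error message, or null if the input satisfies it.
    private final List<Function<Map<String, Object>, String>> rules;

    private SimpleObjectValidator(List<Function<Map<String, Object>, String>> rules) {
        this.rules = List.copyOf(rules);
    }

    static Builder newValidator() {
        return new Builder();
    }

    // Applies all rules and collects every violation; an empty list means the input is valid.
    List<String> validate(Map<String, Object> input) {
        var violations = new ArrayList<String>();
        for (var rule : rules) {
            var error = rule.apply(input);
            if (error != null) {
                violations.add(error);
            }
        }
        return violations;
    }

    static final class Builder {
        private final List<Function<Map<String, Object>, String>> rules = new ArrayList<>();

        // Preset rule: "@id" is optional, but if present it must not be blank.
        Builder verifyOptionalIdNotBlank() {
            rules.add(input -> input.get("@id") instanceof String id && id.isBlank()
                    ? "@id must not be blank" : null);
            return this;
        }

        // Preset rule: the given expanded property must be present.
        Builder verifyMandatory(String property) {
            rules.add(input -> input.containsKey(property) ? null : "missing mandatory property " + property);
            return this;
        }

        SimpleObjectValidator build() {
            return new SimpleObjectValidator(rules);
        }
    }
}
```

Each builder call appends one rule, and in this sketch validate reports all violations at once rather than stopping at the first one.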

Consider the following object of a custom type Foo:

{
  "@context": {
    "@vocab": "https://w3id.org/edc/v0.0.1/ns/",
    "edc": "https://w3id.org/edc/v0.0.1/ns/"
  },
  "@id": "79d9c360-476b-47e8-8925-0ffbeba5aec2",
  "@type": "Foo",
  "bar": "value"
}

A validator for it might look like this:

public class FooValidator {

    public static JsonObjectValidator instance() {
        return JsonObjectValidator.newValidator()
                .verifyId(OptionalIdNotBlank::new)
                .verify("https://w3id.org/edc/v0.0.1/ns/bar")
                .build();
    }
}

It can then be registered with the injectable JsonObjectValidatorRegistry:

public class MyExtension implements ServiceExtension {

    @Inject
    private JsonObjectValidatorRegistry validator;

    @Override
    public void initialize(ServiceExtensionContext context) {
        validator.register("https://w3id.org/edc/v0.0.1/ns/Foo", FooValidator.instance());
    }
}

When needed, it can be invoked like this:

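public class MyController {

    private final JsonObjectValidatorRegistry validator;

    public MyController(JsonObjectValidatorRegistry validator) {
        this.validator = validator;
    }

    public void doSomething(JsonObject input) {
        // throws a ValidationFailureException if the input is not a valid Foo
        validator.validate("https://w3id.org/edc/v0.0.1/ns/Foo", input)
                .orElseThrow(ValidationFailureException::new);
    }
}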

7.2 - Programming Primitives

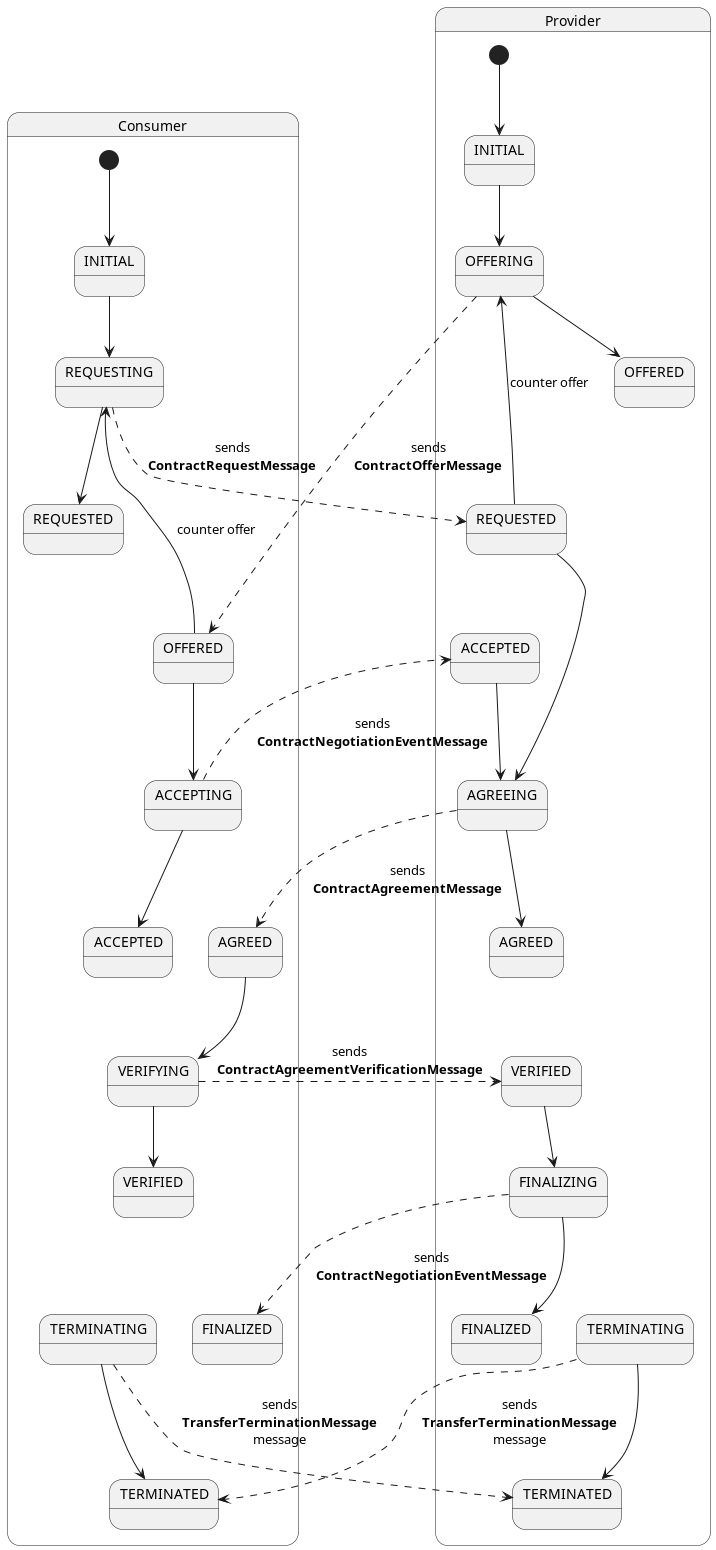

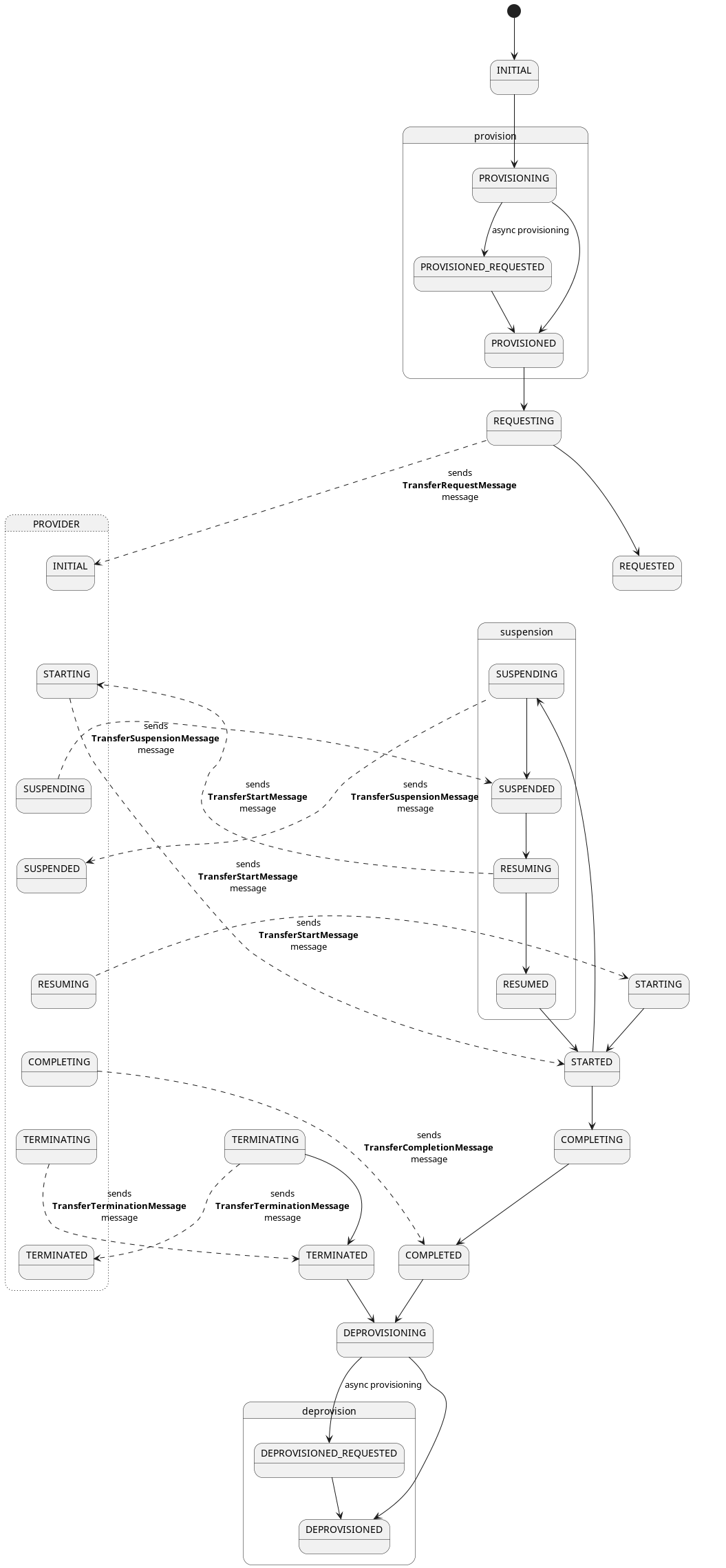

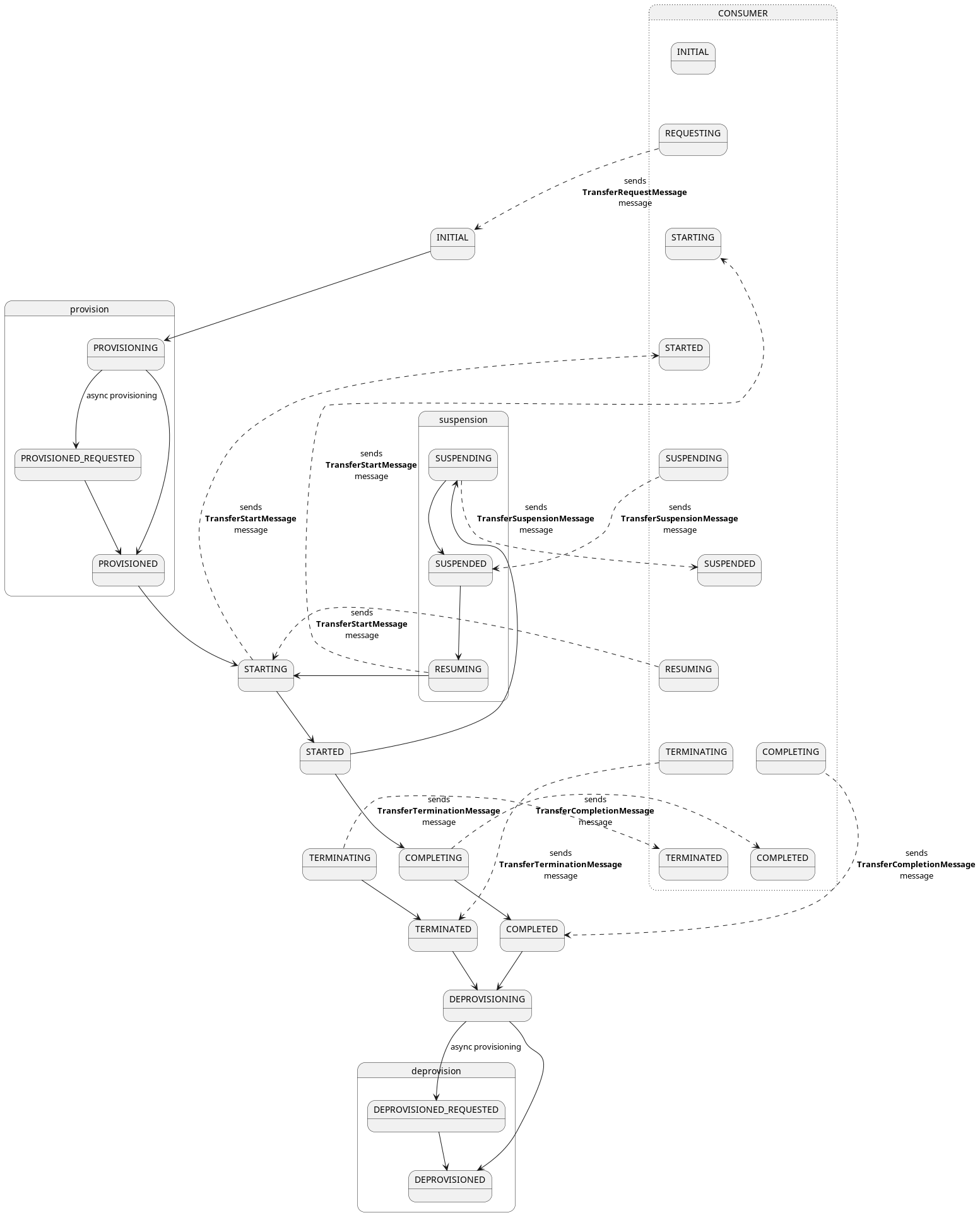

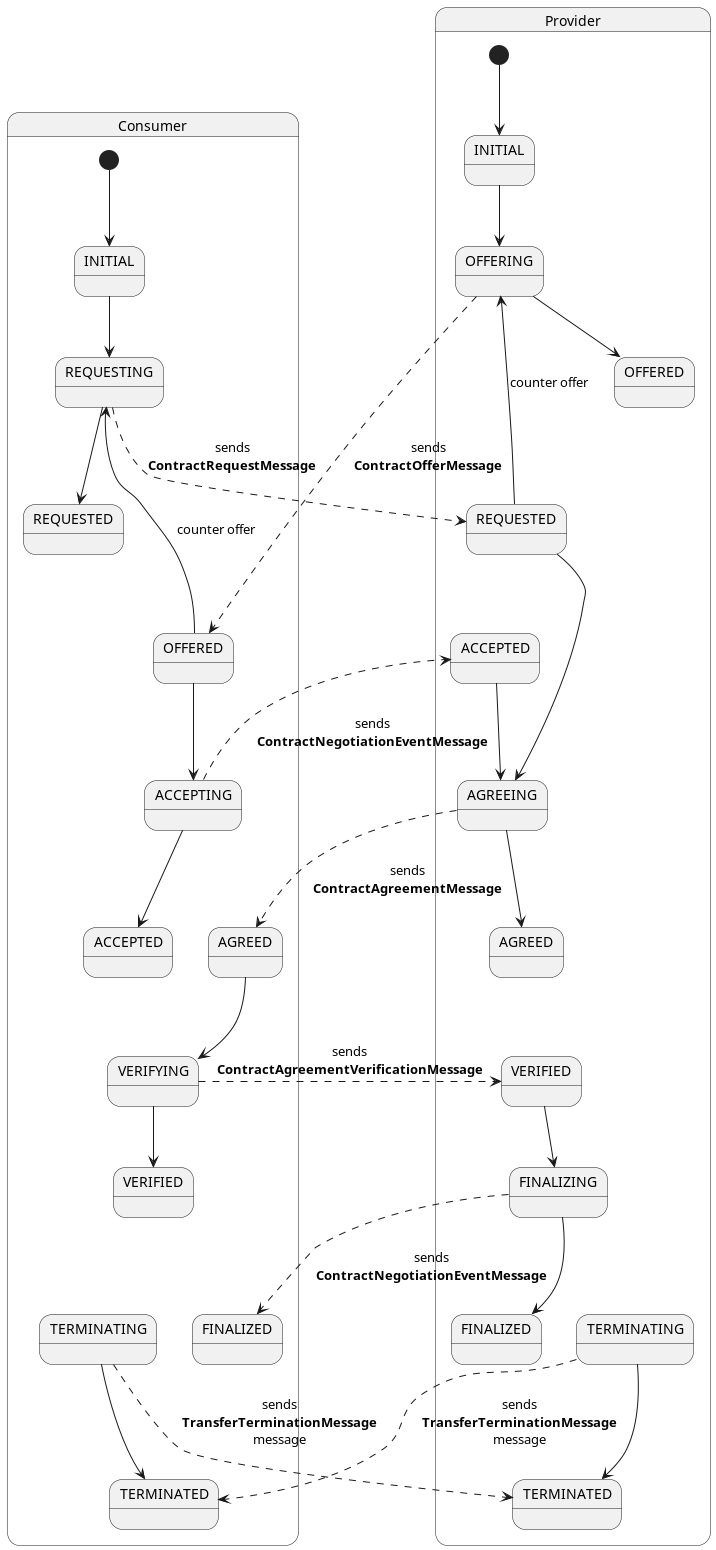

1 State machines

EDC is asynchronous by design, which means that processes are executed in such a way that they block neither the runtime nor the caller. For example, starting a contract negotiation kicks off a long-running process: every contract negotiation has to traverse a series of states, most of which involve sending remote messages to the counter-party. These state transitions are not guaranteed to happen within a certain time frame; they could take hours or even days.

It follows that an EDC instance must be regarded as ephemeral (i.e. it cannot hold state in memory), so the state (of a contract negotiation) must be held in persistent storage. This makes it possible to start and stop connector runtimes arbitrarily, and every replica picks up where another one left off, without causing conflicts or processing an entity twice.

The state machine itself is synchronous: in every iteration it processes a number of objects and then either goes back to sleep, if there was nothing to process, or continues right away.
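The iteration logic just described can be sketched as follows, assuming each processor is modelled as an IntSupplier that returns the number of entities it handled. TickLoop, tick and run are hypothetical names used for illustration; this is not EDC's StateMachineManager.

```java
import java.util.List;
import java.util.function.IntSupplier;

// Hypothetical, minimal model of a state machine loop; not EDC's StateMachineManager.
final class TickLoop {
    private final List<IntSupplier> processors;
    private final long idleWaitMillis;

    TickLoop(List<IntSupplier> processors, long idleWaitMillis) {
        this.processors = processors;
        this.idleWaitMillis = idleWaitMillis;
    }

    // One iteration: invoke every processor in order and sum up the processed entities.
    int tick() {
        int processed = 0;
        for (var processor : processors) {
            processed += processor.getAsInt();
        }
        return processed;
    }

    // Runs a fixed number of iterations (a real loop would run until stopped) and returns
    // how many of them ended in an idle wait because nothing was processed.
    int run(int iterations) throws InterruptedException {
        int idleIterations = 0;
        for (int i = 0; i < iterations; i++) {
            if (tick() == 0) {
                idleIterations++;
                Thread.sleep(idleWaitMillis); // nothing to do: go back to sleep for a while
            }
            // otherwise continue right away with the next iteration
        }
        return idleIterations;
    }
}
```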

At a high level this is implemented in the StateMachineManager, which uses a set of Processors. The StateMachineManager sequentially invokes each Processor, which then reports the number of processed entities. In EDC’s state machines, processors are functions that handle StatefulEntities in a particular state and are registered when the application starts up:

// ProviderContractNegotiationManagerImpl.java
@Override
protected StateMachineManager.Builder configureStateMachineManager(StateMachineManager.Builder builder) {
    return builder
            .processor(processNegotiationsInState(OFFERING, this::processOffering))
            .processor(processNegotiationsInState(REQUESTED, this::processRequested))
            .processor(processNegotiationsInState(ACCEPTED, this::processAccepted))
            .processor(processNegotiationsInState(AGREEING, this::processAgreeing))
            .processor(processNegotiationsInState(VERIFIED, this::processVerified))
            .processor(processNegotiationsInState(FINALIZING, this::processFinalizing))
            .processor(processNegotiationsInState(TERMINATING, this::processTerminating));
}

This instantiates a Processor that binds a given state to a callback function, for example AGREEING -> this::processAgreeing. When the StateMachineManager invokes this Processor, it loads all contract negotiations in that state (here: AGREEING) and passes each one to the processAgreeing method.

All processors are invoked sequentially, because a single entity may transition through multiple states in the same iteration.
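The effect of sequential invocation can be shown with a small model in which one processor's callback moves an entity into the state handled by the next processor. NegotiationState, Negotiation, StateProcessor and Iteration are hypothetical, reduced stand-ins, not EDC classes; as in processNegotiationsInState, each processor binds one state to a callback, and the callback returns true if it processed the entity.

```java
import java.util.List;
import java.util.function.Predicate;

// Hypothetical, reduced set of states; EDC's contract negotiation has many more.
enum NegotiationState { REQUESTED, AGREEING, VERIFIED }

// Hypothetical stand-in for a StatefulEntity such as a contract negotiation.
final class Negotiation {
    NegotiationState state;

    Negotiation(NegotiationState state) {
        this.state = state;
    }
}

// Binds one state to a callback; the callback returns true if it processed the entity.
record StateProcessor(NegotiationState state, Predicate<Negotiation> callback) {

    // Passes every entity currently in the bound state to the callback; returns how many were processed.
    int process(List<Negotiation> store) {
        int processed = 0;
        for (var negotiation : store) {
            if (negotiation.state == state && callback.test(negotiation)) {
                processed++;
            }
        }
        return processed;
    }
}

final class Iteration {
    // Invokes the processors one after another, so a later processor sees the state
    // changes made by an earlier one within the same iteration.
    static int run(List<StateProcessor> processors, List<Negotiation> store) {
        int total = 0;
        for (var processor : processors) {
            total += processor.process(store);
        }
        return total;
    }
}
```

With callbacks that advance REQUESTED to AGREEING and AGREEING to VERIFIED, a negotiation starting in REQUESTED ends up in VERIFIED after a single iteration, because the AGREEING processor runs after the REQUESTED processor has already advanced it.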

1.1 Batch-size, sorting and tick-over timeout

In every iteration the state machine loads multiple StatefulEntity objects from the database. To avoid overwhelming the state machine and to prevent entities from becoming stale, the following safeguards are in place:

- batch-size: the maximum number of entities per state that are fetched from the database
- sorting: StatefulEntity objects are sorted based on when their state was last updated, oldest first
- iteration timeout: if no StatefulEntities were processed, the state machine simply yields for a configurable amount of time
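The batch-size and sorting safeguards roughly correspond to the following selection, sketched in memory with Java streams. The Entity record, its fields, the BatchSelector class and the column name in the comment are hypothetical; the actual queries used by EDC's stores are not reproduced here.

```java
import java.time.Instant;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for a StatefulEntity row: id, current state and time of the last state change.
record Entity(String id, String state, Instant stateTimestamp) { }

final class BatchSelector {

    // Roughly "WHERE state = ? ORDER BY state_timestamp ASC LIMIT ?" (hypothetical column name):
    // only entities in the requested state, oldest state change first, at most batchSize of them.
    static List<Entity> nextBatch(List<Entity> table, String state, int batchSize) {
        return table.stream()
                .filter(entity -> entity.state().equals(state))
                .sorted(Comparator.comparing(Entity::stateTimestamp))
                .limit(batchSize)
                .toList();
    }
}
```

Sorting oldest first means that an entity which was skipped in one iteration moves towards the front of the queue, so it cannot be starved by newer entities.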

1.2 Database-level locking

In production deployments the control plane is typically replicated over several instances for performance and robustness. This must be considered when loading StatefulEntity objects from the database, because two replicas might attempt to load the same entity at the same time, which, without locks, would lead to race conditions, data inconsistencies, duplicated DSP messages and other problems.

To avoid this, EDC employs pessimistic exclusive locks on the database level for stateful entities, which are called Leases. These are entries in a database that indicate whether an entity is currently leased, whether the lease is expired and which replica leased the entity. Attempting to acquire a lease for an already-leased entity is only possible if the lease holder is the same.

Note that the value of the edc.runtime.id property is used to record the holder of a Lease. It is recommended not to configure this property in clustered environments, so that randomized runtime IDs (the default) are used; otherwise, replicas sharing the same ID would all count as the holder of each other's leases.

Generally the process is as follows:

- load N “leasable” entities and acquire a lease for each one. An entity is considered “leasable” if it is not already leased, or the current runtime already holds the lease, or the lease is expired.
- if the entity was processed, advance its state and free the lease
- if the entity was not processed, free the lease

That way, each replica of the control plane holds an exclusive lock for a particular entity while it is trying to process and advance its state.
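The “leasable” rule can be illustrated with a hypothetical in-memory LeaseTable. In EDC, leases are entries in the database, as described above, so this sketch only shows the decision logic (not leased, held by the same runtime, or expired) and not how the database enforces the lock.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory model of a lease table; it only illustrates the "leasable" rule.
final class LeaseTable {

    record Lease(String holder, Instant acquiredAt, Duration duration) {
        boolean isExpired(Instant now) {
            return now.isAfter(acquiredAt.plus(duration));
        }
    }

    private final Map<String, Lease> leases = new HashMap<>();

    // Leasable if: not leased, or the caller already holds the lease, or the lease has expired.
    synchronized boolean tryAcquire(String entityId, String runtimeId, Instant now, Duration duration) {
        var current = leases.get(entityId);
        if (current != null && !current.holder().equals(runtimeId) && !current.isExpired(now)) {
            return false; // held by another replica and still valid
        }
        leases.put(entityId, new Lease(runtimeId, now, duration));
        return true;
    }

    // Frees the lease after an attempt, whether or not the entity's state was advanced.
    synchronized void release(String entityId, String runtimeId) {
        var current = leases.get(entityId);
        if (current != null && current.holder().equals(runtimeId)) {
            leases.remove(entityId);
        }
    }
}
```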

EDC uses JSON-LD serialization on API ingress and egress. More information about this can be found in this chapter, but the TL;DR is that it is necessary because of extensible properties and namespaces on wire-level DTOs.

2.1 Basic Serialization and Deserialization

On API ingress and egress, this means that conventional serialization and deserialization (“SerDes”) cannot be achieved with Jackson, because Jackson operates on a configurable, but ultimately rigid schema.

For that reason, EDC implements its own SerDes layer, called “transformers”. The common base class for all transformers is AbstractJsonLdTransformer<I,O>, and the naming convention is JsonObject[To|From]<Entity>Transformer, for example JsonObjectToAssetTransformer. They typically come in pairs, to enable both serialization and deserialization.

Another rule is that the entity class must contain the fully-qualified (expanded) property names as constants. Typical programming patterns are:

- deserialization: transformers contain a switch statement that parses the property names and populates the entity’s builder.
- serialization: transformers simply construct the JsonObject based on the properties of the entity using a JsonObjectBuilder.
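Both patterns can be sketched without EDC dependencies. In this sketch a Map of expanded property names stands in for a JsonObject, and the Person entity, its builder and both transformer classes are hypothetical; real transformers extend AbstractJsonLdTransformer and work on jakarta.json types.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical entity that exposes its expanded property names as constants.
final class Person {
    static final String NS = "http://w3id.org/starwars/v0.0.1/ns/";
    static final String PERSON_NAME = NS + "name";
    static final String PERSON_WEBPAGE = NS + "webpage";

    final String name;
    final String webpage;

    private Person(String name, String webpage) {
        this.name = name;
        this.webpage = webpage;
    }

    static final class Builder {
        private String name;
        private String webpage;

        Builder name(String name) { this.name = name; return this; }

        Builder webpage(String webpage) { this.webpage = webpage; return this; }

        Person build() { return new Person(name, webpage); }
    }
}

// Deserialization: switch over the expanded property names and populate the builder.
final class MapToPersonTransformer {
    Person transform(Map<String, Object> json) {
        var builder = new Person.Builder();
        for (var entry : json.entrySet()) {
            switch (entry.getKey()) {
                case Person.PERSON_NAME -> builder.name((String) entry.getValue());
                case Person.PERSON_WEBPAGE -> builder.webpage((String) entry.getValue());
                default -> { } // unknown properties are ignored in this sketch
            }
        }
        return builder.build();
    }
}

// Serialization: build the JSON structure from the entity's properties.
final class MapFromPersonTransformer {
    Map<String, Object> transform(Person person) {
        var json = new LinkedHashMap<String, Object>();
        json.put(Person.PERSON_NAME, person.name);
        json.put(Person.PERSON_WEBPAGE, person.webpage);
        return json;
    }
}
```

Because the property names are compile-time constants on the entity, they can be used directly as case labels in the switch.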

2.2 Transformer context

Many entities in EDC are complex objects that contain other complex objects. For example, a ContractDefinition contains the asset selector, which is a List<Criterion>. However, a Criterion is also used in a QuerySpec, so it makes sense to extract its deserialization into a dedicated transformer. So when the JsonObjectToContractDefinitionTransformer encounters the asset selector property in the JSON structure, it delegates its deserialization back to the TransformerContext, which holds a global list of type transformers (TypeTransformerRegistry).

As a general rule of thumb, a transformer should only deserialize first-order properties, and nested complex objects should be delegated back to the TransformerContext.
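Delegation through the context can be sketched with a hypothetical, minimal TransformerContext that looks up transformers by output type. The JsonTransformer interface, the Criterion and ContractDefinition records, the simplified (non-expanded) property names and the Map standing in for a JsonObject are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical transformer contract: a Map stands in for the JSON object.
interface JsonTransformer<O> {
    O transform(Map<String, Object> json, TransformerContext context);
}

// Hypothetical context holding the known transformers, looked up by output type.
final class TransformerContext {
    private final Map<Class<?>, JsonTransformer<?>> transformers = new HashMap<>();

    <O> void register(Class<O> type, JsonTransformer<O> transformer) {
        transformers.put(type, transformer);
    }

    // Finds the transformer for the requested type and hands it this context for further delegation.
    <O> O transform(Map<String, Object> json, Class<O> type) {
        var transformer = transformers.get(type);
        if (transformer == null) {
            throw new IllegalArgumentException("no transformer for " + type.getSimpleName());
        }
        return type.cast(transformer.transform(json, this));
    }
}

record Criterion(String operandLeft, String operator, Object operandRight) { }

record ContractDefinition(String id, List<Criterion> assetsSelector) { }

// Handles only first-order properties; each nested criterion is delegated back to the context.
final class ContractDefinitionTransformer implements JsonTransformer<ContractDefinition> {
    @Override
    public ContractDefinition transform(Map<String, Object> json, TransformerContext context) {
        @SuppressWarnings("unchecked")
        var selector = (List<Map<String, Object>>) json.get("assetsSelector");
        var criteria = selector.stream()
                .map(criterion -> context.transform(criterion, Criterion.class))
                .toList();
        return new ContractDefinition((String) json.get("@id"), criteria);
    }
}
```

The ContractDefinitionTransformer never needs to know how a Criterion is parsed, so the same Criterion transformer can be reused wherever criteria appear.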

Every module that contains a type transformer should register it with the TypeTransformerRegistry in its accompanying extension:

@Inject
private TypeTransformerRegistry typeTransformerRegistry;

@Override
public void initialize(ServiceExtensionContext context) {
    typeTransformerRegistry.register(new JsonObjectToYourEntityTransformer());
}

One might encounter situations where different serialization formats are required for the same entity. For example, DataAddress objects are serialized differently on the Signaling API and the DSP API.

If we simply registered both transformers with the transformer registry, the second registration would overwrite the first, because both transformers have the same input and output types:

public class JsonObjectFromDataAddressTransformer extends AbstractJsonLdTransformer<DataAddress, JsonObject> {
    //...
}

public class JsonObjectFromDataAddressDspaceTransformer extends AbstractJsonLdTransformer<DataAddress, JsonObject> {
    //...
}

Consequently, all DataAddress objects would get serialized in the same way.
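The collision can be reproduced with a hypothetical registry keyed by the pair of input and output types. SimpleTransformerRegistry and DataAddressStub are illustrative names, and a String stands in for the serialized JsonObject. The sketch also includes a simplified forContext method that returns an independent registry per context string, anticipating the approach described next; it is not EDC's implementation.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for DataAddress.
record DataAddressStub(String type) { }

// Hypothetical registry: one transformer per (input type, output type) pair.
final class SimpleTransformerRegistry {
    private final Map<List<Class<?>>, Function<Object, Object>> byTypes = new HashMap<>();
    private final Map<String, SimpleTransformerRegistry> segments = new HashMap<>();

    void register(Class<?> input, Class<?> output, Function<Object, Object> transformer) {
        byTypes.put(List.of(input, output), transformer); // same key: replaces the earlier entry
    }

    Object transform(Object input, Class<?> output) {
        var transformer = byTypes.get(List.of(input.getClass(), output));
        if (transformer == null) {
            throw new IllegalStateException("no transformer for " + input.getClass().getSimpleName());
        }
        return transformer.apply(input);
    }

    // Simplified segment: an independent registry per context string.
    SimpleTransformerRegistry forContext(String context) {
        return segments.computeIfAbsent(context, c -> new SimpleTransformerRegistry());
    }
}
```

Registering a "signaling" and a "dsp" variant in the same registry leaves only the second one in place, whereas registering each in its own segment keeps both available.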

To overcome this limitation, EDC has the concept of segmented transformer registries, where the segment is defined by a string called a “context”:

@Inject

private TypeTransformerRegistry typeTransformerRegistry;

@Override

public void initialize(ServiceExtensionContext context) {

var signalingApiRegistry = typeTransformerRegistry.forContext("signaling-api");